Trends | 7 predictions for data and analytics in 2022

13.02.2022 | 11 min read | Category: Data Market | Tags: #data lakehouse, #dataops

Do you have a full overview of everything needed to become more data-driven? Here are 7 trends you should know about across the categories of architecture, developer teams, data flow, DataOps and governance, data science and analytics, delivery model and value creation from data.

1. Architecture

Get excited, data lakehouses are coming!

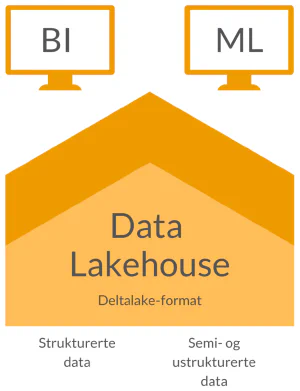

Data lakes (aka Hadoop technology, aka Big Data) were a hot topic for a while, and I have been a product owner for one myself. Data lakes let you store very large amounts of data affordably and give developers and data scientists great flexibility to build more advanced data products. With Spark, we also gained the ability to scale the processing of large data volumes. However, the data needs further processing before it provides value, and the user base remained small because extracting and processing data required significant technical expertise.

Data warehouses have been around for many years, and here too I have been a project manager and product owner. Their great advantage is that the data is prepared for use through shared data models. Much of the work of compiling and cleansing data and standardising business terminology is done in advance, so users have a much shorter path to self-service. On the other hand, agreeing on and developing these models takes a long time, and the flexibility to quickly create something new is perceived as limited.

Some organisations even have multiple data lakes and multiple data warehouses. The data lakehouse model, a data lake holding raw data with logical data warehouse layers on top, helps minimise data movement. The Delta Lake table format adds database-style operations on top of data lake files: ACID transactions that let us both update and delete data, which we need in order to comply with GDPR requirements, for example. Technologies like Databricks and Snowflake, running on cloud infrastructure, make it much easier to get this up and running. Finally, we can spend our energy creating value from data, rather than provisioning hardware, tuning for performance or carrying out upgrades.
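To make the ACID point concrete, here is a toy Python sketch of the idea behind a transaction-log table format such as Delta Lake: data files are never edited in place, and a delete writes new files without the affected rows and swaps them in with a single log commit. Everything here (`ToyDeltaTable`, its methods, the file names) is hypothetical and heavily simplified; it is not the Delta Lake API, and it ignores concurrency, schemas and the actual file format.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ToyDeltaTable:
    """Hypothetical, simplified lakehouse table (NOT the Delta Lake API):
    immutable data 'files' plus an append-only commit log."""
    files: dict = field(default_factory=dict)  # file name -> list of rows
    log: list = field(default_factory=list)    # one JSON entry per committed version

    def _commit(self, adds, removes):
        # Appending one log entry is the single atomic step: readers either
        # see the whole change or none of it.
        self.log.append(json.dumps({"add": sorted(adds), "remove": sorted(removes)}))

    def live_files(self, version=None):
        # Replay the log (optionally only up to `version`) to find the live files.
        entries = self.log if version is None else self.log[: version + 1]
        live = set()
        for entry in entries:
            action = json.loads(entry)
            live -= set(action["remove"])
            live |= set(action["add"])
        return live

    def read(self, version=None):
        return [row for name in sorted(self.live_files(version)) for row in self.files[name]]

    def write(self, name, rows):
        self.files[name] = list(rows)
        self._commit(adds=[name], removes=[])

    def delete_where(self, predicate):
        # Copy-on-write delete: write new files without the matching rows,
        # then swap old files for new ones in ONE commit.
        adds, removes = [], []
        for name in sorted(self.live_files()):
            rows = self.files[name]
            kept = [r for r in rows if not predicate(r)]
            if len(kept) != len(rows):
                new_name = f"{name}.v{len(self.log)}"
                self.files[new_name] = kept
                adds.append(new_name)
                removes.append(name)
        if removes:
            self._commit(adds, removes)


table = ToyDeltaTable()
table.write("part-0", [{"customer_id": 1}, {"customer_id": 2}])
table.write("part-1", [{"customer_id": 3}])
table.delete_where(lambda r: r["customer_id"] == 2)  # e.g. a GDPR erasure request

print([r["customer_id"] for r in table.read()])           # [1, 3]
print([r["customer_id"] for r in table.read(version=1)])  # [1, 2, 3]
```

Note that the old file is still reachable through earlier versions, so customer 2 is still visible when reading version 1. In Delta Lake, files that are no longer referenced are only physically removed by `VACUUM` after the retention period, which is worth remembering when handling GDPR erasure.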

WHAT’S HAPPENING IN 2022?

Data lakehouse architectures gain a foothold

Data lakehouses are now being implemented, including in Norway. And yes, we at Glitni have first-hand experience!

What this means for you depends on which of two tracks you are on:

- Don’t have anything yet? If you have both some data and many use cases, the choice is straightforward.

- Lots of technical debt and limited documentation? Migrating the old solution takes time, and you often inherit much of the technical debt regardless. We recommend assessing which parts of the solution should be rebuilt, and taking a gradual approach.

2. Developer teams

Cloud first - and data platform architects and developers will be in high demand!

Virtually no one builds new data platforms on their own infrastructure any more. The roughly 10 percent who don’t choose Google Cloud, AWS or Azure either have large data centres of their own, too much data to process, or strict regulatory constraints that rule out cloud solutions. The rest of us go cloud first.

You thought the world was simpler in the cloud? It is, but the platform in the cloud should ideally solve multiple types of use cases beyond historical reporting. New roles for the data platform are emerging. A data lakehouse in the cloud neither establishes itself, operates itself, nor gains new capabilities by itself. Unlike many other off-the-shelf applications, the bulk of the effort lies in how the data in the platform is ingested, stored and processed.

We need dedicated platform architects and developers. Note that these are not data engineers with a new name - this is an entirely separate role. However, it doesn’t hurt that several members of the data platform team have a background in ELT development.

WHAT’S HAPPENING IN 2022?

Platform architects and developers are becoming increasingly important, and there are still few of them

- The platform team needs a good understanding of business requirements that must be translated into shared capabilities, DevOps/DataOps/MLOps, infrastructure, integration methods and interfaces, data flow (ELT, Pub/Sub), modelling, monitoring and data testing (data observability), and not least security and access management.

- The super-person who knows all this inside out naturally doesn’t exist, so build complementary expertise in the team. Make good use of the consultancy market to establish the team, the platform and good processes and routines. At the same time, it’s important to ensure you build internal competence along the way!

3. Data flow

ELT, Pub/Sub and Reverse ELT: Yes please!

Although ETL (Extract, Transform, Load) has served us well over recent decades, ELT (you guessed it: Extract, Load, Transform) has now taken over. It makes more sense to load the raw data first and transform it where it resides; the same data can then be processed in all the ways the use cases require (analytics, reporting, etc.).
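A minimal sketch of the ELT order of operations, using Python’s built-in `sqlite3` module as a stand-in for the data platform (an assumption for illustration; in practice this would be a cloud warehouse or lakehouse). The source rows and table names are hypothetical. The raw data is loaded untouched, and the transformations are defined afterwards as SQL views inside the platform.

```python
import sqlite3

# Hypothetical source extract: order lines loaded as-is
# (everything as text, inconsistent casing), with no upfront transformation.
raw_orders = [
    ("1001", "oslo", "250.00"),
    ("1002", "Bergen", "99.50"),
    ("1003", "OSLO", "120.00"),
]

conn = sqlite3.connect(":memory:")  # stands in for the cloud data platform

# Extract + Load: land the raw data untouched in a raw table.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, city TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_orders)

# Transform: done afterwards, inside the platform, with SQL.
conn.execute("""
    CREATE VIEW orders_clean AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           UPPER(SUBSTR(city, 1, 1)) || LOWER(SUBSTR(city, 2)) AS city,
           CAST(amount AS REAL) AS amount
    FROM raw_orders
""")
# A second model built on top of the first, e.g. for a reporting use case.
conn.execute("""
    CREATE VIEW revenue_by_city AS
    SELECT city, SUM(amount) AS revenue FROM orders_clean GROUP BY city
""")

result = conn.execute("SELECT city, revenue FROM revenue_by_city ORDER BY city").fetchall()
print(result)  # [('Bergen', 99.5), ('Oslo', 370.0)]
```

Because the raw table stays intact, a new use case can add its own view without re-extracting anything from the source system.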

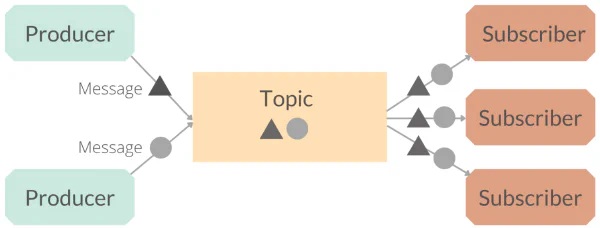

Pub/Sub integration can be labour-intensive, but it makes data available in near real-time for every possible use, including in environments outside the data platform. In effect, each data stream becomes a data product, published much like a microservice, that different solutions can subscribe to. Kafka is still the leading technology platform, but relatively few in Norway have extensive experience with it. The expertise being built now is emerging from general software development environments, not from the traditional ETL-heavy data warehouse world.
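The publish/subscribe pattern itself fits in a few lines. This is a toy, in-process sketch, not Kafka: the `InMemoryBroker` class, the topic name and the event fields are all hypothetical, and a real broker adds persistence, partitioning, ordering guarantees and consumer groups. What it shows is the decoupling: the publisher doesn’t know who consumes the event, and a consumer outside the data platform receives it just as readily as one inside it.

```python
from collections import defaultdict
from typing import Callable


class InMemoryBroker:
    """Hypothetical toy broker: topics and synchronous, in-process delivery."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # The publisher does not know who consumes the event; every subscriber gets it.
        for handler in self._subscribers[topic]:
            handler(event)


broker = InMemoryBroker()

# Two independent consumers of the same hypothetical "customer-updated" stream:
# one inside the data platform, one in an operational system outside it.
platform_rows: list[dict] = []
crm_cache: dict[int, str] = {}


def update_crm_cache(event: dict) -> None:
    crm_cache[event["customer_id"]] = event["email"]


broker.subscribe("customer-updated", platform_rows.append)
broker.subscribe("customer-updated", update_crm_cache)

broker.publish("customer-updated", {"customer_id": 42, "email": "new@example.com"})
print(platform_rows)  # [{'customer_id': 42, 'email': 'new@example.com'}]
print(crm_cache)      # {42: 'new@example.com'}
```

Adding a third consumer later only requires another `subscribe` call; the publisher is unchanged.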

Reverse ELT is a fairly new concept: moving data from the data platform back out into operational systems. It covers a subset of data integration closely linked to Master Data Management. In short, there are now tools that push changes in, for example, product attributes from the platform into the solutions that need them. Perhaps we’ll hear more about this in the years ahead, or it will simply become a natural feature of established MDM or data integration tools.
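A minimal sketch of the core of reverse ELT under simple assumptions: compare the curated product attributes in the platform with what the target system received at the last sync, and push only the changed attributes. The product data, the `changed_attributes` and `push_to_target` helpers and the `send` callable (standing in for the target system’s API) are hypothetical; a real tool would also handle deleted records, retries and rate limits.

```python
# Hypothetical curated product attributes in the data platform.
platform_products = {
    "SKU-1": {"name": "Rain jacket", "colour": "yellow", "price": 899},
    "SKU-2": {"name": "Wool socks", "colour": "grey", "price": 149},
}

# What the target system (e.g. a webshop) received at the last sync.
last_synced = {
    "SKU-1": {"name": "Rain jacket", "colour": "yellow", "price": 999},
    "SKU-2": {"name": "Wool socks", "colour": "grey", "price": 149},
}


def changed_attributes(current, previous):
    """Return {key: {attribute: new_value}} for records that are new or differ."""
    changes = {}
    for key, attrs in current.items():
        old = previous.get(key, {})
        diff = {a: v for a, v in attrs.items() if old.get(a) != v}
        if diff:
            changes[key] = diff
    return changes


def push_to_target(changes, send):
    """Call `send(key, diff)` once per changed record; `send` is a hypothetical
    wrapper around the target system's API."""
    for key, diff in changes.items():
        send(key, diff)


sent = []
push_to_target(changed_attributes(platform_products, last_synced),
               lambda key, diff: sent.append((key, diff)))
print(sent)  # [('SKU-1', {'price': 899})]
```

Sending only the diff keeps the load on the operational system low and makes each push easy to audit.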

WHAT’S HAPPENING IN 2022?

Yes please, we need more ways to get hold of data

A trend that has become clear to me is that we typically say “yes please” to all ways of getting data into a data platform. We have some architecture patterns we prefer (because they’re cheapest/simplest/best to monitor, etc.), and some we use occasionally. A combination of ELT and Pub/Sub is probably a fairly uncontroversial prediction.

This means at least three things:

- Data engineers must either learn more tools and architecture patterns, or collaborate with different development teams to get the data in.

- Integration architects must master data architecture to a greater extent.

- It becomes important to understand where the data comes from, how it has been processed, and what assumptions can be made when interpreting the data. This means that tools and capabilities such as data catalogues and data lineage become more important.
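Data lineage is, at its core, a dependency graph between datasets. The sketch below assumes lineage metadata has already been collected as a hypothetical mapping from each dataset to its direct sources, and answers the two questions lineage is typically used for: where does this data come from, and what is affected if this source changes? The dataset names and the `upstream`/`downstream` helpers are illustrative only.

```python
from collections import deque

# Hypothetical lineage metadata: each dataset lists the datasets it is built from.
lineage = {
    "sales_dashboard": ["revenue_by_city"],
    "revenue_by_city": ["orders_clean"],
    "orders_clean": ["raw_orders", "raw_cities"],
    "raw_orders": [],
    "raw_cities": [],
}


def upstream(dataset, graph):
    """Breadth-first walk returning every dataset that `dataset` depends on."""
    seen, queue = set(), deque(graph.get(dataset, []))
    while queue:
        parent = queue.popleft()
        if parent not in seen:
            seen.add(parent)
            queue.extend(graph.get(parent, []))
    return seen


def downstream(dataset, graph):
    """Every dataset that would be affected if `dataset` changes."""
    return {d for d in graph if dataset in upstream(d, graph)}


print(sorted(upstream("sales_dashboard", lineage)))
# ['orders_clean', 'raw_cities', 'raw_orders', 'revenue_by_city']
print(sorted(downstream("raw_orders", lineage)))
# ['orders_clean', 'revenue_by_city', 'sales_dashboard']
```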

4. DataOps and Data Governance

Data observability + what’s happening with data catalogues?

There are many “Ops” concepts. A couple of years ago came MLOps, i.e. the operation and continued development of machine learning pipelines. Now we’re talking about DataOps. Nobody has quite settled on a definition of the concept or its practical consequences, beyond the fact that it’s about bringing DevOps and Agile principles from software development into the data world.

WHAT’S HAPPENING IN 2022?

There are two areas that stand out as trends for 2022 within DataOps and Data Governance:

Data observability is something data engineers are starting to talk about, but few are truly making it work

- Data observability is about automating the monitoring of data flows, with emphasis on data lineage (what has happened to the data up to a given point) and data quality (logical checks showing whether the data has changed character beyond what we expect). Tools and methods are maturing, but we’re still at the starting line.
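As a rough illustration of the data quality side, here is a sketch of a few automated checks on one incoming batch: volume compared with earlier loads, missing columns, and null rates. The function name, the thresholds (`max_null_rate`, `z_limit`) and the sample data are assumptions chosen for the example; dedicated observability tools offer richer versions of the same idea, such as learned baselines and alerting.

```python
from statistics import mean, stdev


def check_batch(rows, history_row_counts, required_columns, max_null_rate=0.05, z_limit=3.0):
    """Return human-readable issues for one batch of rows (a list of dicts)."""
    issues = []

    # Volume: flag a row count far outside what earlier loads looked like.
    if len(history_row_counts) >= 2:
        mu, sigma = mean(history_row_counts), stdev(history_row_counts)
        if sigma > 0 and abs(len(rows) - mu) / sigma > z_limit:
            issues.append(f"row count {len(rows)} deviates from history (mean {mu:.0f})")

    # Schema and completeness: missing columns and too many nulls.
    for col in required_columns:
        if all(col not in r for r in rows):
            issues.append(f"column '{col}' missing")
            continue
        null_rate = sum(r.get(col) is None for r in rows) / len(rows)
        if null_rate > max_null_rate:
            issues.append(f"column '{col}' null rate {null_rate:.0%}")
    return issues


# Hypothetical batch: far fewer rows than usual, 20% missing e-mails, no country column.
batch = [
    {"customer_id": i, "email": None if i % 5 == 0 else f"c{i}@example.com"}
    for i in range(100)
]
issues = check_batch(batch, [1000, 1020, 980, 1010], ["customer_id", "email", "country"])
for issue in issues:
    print(issue)
```

A simple z-score on row counts is only one possible choice; seasonal data (weekends, month-end) would need a baseline that accounts for it.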

Lots of data, many users, and many different use cases require the data catalogue to be put in place

- Data catalogues demand a lot of people and processes: agreeing on definitions, ownership and routines. In practice, data catalogue initiatives are therefore scaled down to the most central data domains and kept to a minimum. And that’s fine. We may also end up with data catalogue capabilities spread across several tools. What do we want? Automated generation and sharing of metadata throughout the entire data flow, available to everyone who needs it, with room for social interaction so we can learn from each other how the data should be used.

5. Data Science and analytics

Long live the business analysts!

We’re out to solve business problems and use data to support operational processes. My claim is that most organisations aren’t ready to adopt the most advanced methods anyway. As long as they don’t have control of the basic processes around data governance and using data to answer business questions like “what happened?” and “why did it happen?”, raising the organisation’s collective data competence will deliver far more value than optimising narrow use cases.

WHAT’S HAPPENING IN 2022?

Business analysts first

- The hype around data science as a term is cooling a little, and business analysts are taking the spotlight. We can usually develop business analysts ourselves, while productive data scientists are extremely difficult to recruit.

Increased awareness of competence building? Yes please!

- Cultivating the collective ability to use data is important, and for many there is still great potential for value creation from data here. Internal competence programmes continue to be carried out to increase skills, but unfortunately most will still be focused on how to use Excel/Power BI/Tableau and other self-service tools, rather than using data for problem-solving.

6. Delivery model

Decentralised ownership spreads further, inspired by Data Mesh

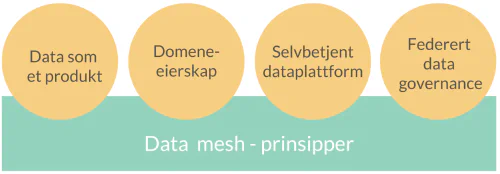

If you work in the data world, you have probably come across the data mesh concept already. It was first described by Zhamak Dehghani in the 2019 blog post “How to Move Beyond a Monolithic Data Lake to a Distributed Data Mesh”, followed in 2020 by “Data Mesh Principles and Logical Architecture”. The core idea is that organisations can become more data-driven by moving from central data warehouses and data lakes to domain-oriented data ownership and architecture, driven by self-service analytics and a set of shared guidelines. The weakness of today’s way of organising is that the central data platform becomes a bottleneck. Data Mesh seeks to break free from a centralised architecture by decentralising both ownership of, and responsibility for developing, data products.

The idea has gained momentum, and companies such as Zalando are now openly sharing how they are implementing Data Mesh. What makes it all a bit confusing is that Data Mesh is not something you can buy. It’s more a concept for organisational design, made up of pieces that have to be assembled like a puzzle.

WHAT’S HAPPENING IN 2022?

Decentralised ownership is creeping in

Decentralised ownership of datasets, reports and analytics models is spreading further, but we’re not being fanatical. It takes longer with data flows and data domains (think “Customer” or “Product” as domains). And it’s not certain everyone should decentralise everything - data mesh as a design concept doesn’t suit all types of organisations - especially not the small and medium-sized ones.

Very few have true data mesh yet, since most organisations need to scale up their analytical and technical competence significantly to drive domain-based ownership and architecture. Many are also beginning to realise that it’s hard to define separate ownership and delivery models for data products alone, when the rest of the digital ecosystem should be viewed in the same way.

It may also be worth debating whether you need to decentralise the architecture (we can achieve a lot with technologies like Snowflake, Databricks and dbt on a central platform) - isn’t it really through the organisation that the value of Data Mesh is realised?

7. From data to value

(Almost) everyone realises they need to become data-driven

Are Norwegian organisations becoming more data-driven? Perhaps; several have probably surveyed this. Maturing takes time, and doesn’t happen by chance. Fortunately, we now have highly visible international examples, and a few Norwegian ones, showing that data is the great differentiator between being an industry leader and being at the back of the pack.

We no longer need to spend much time arguing that data can provide value. Instead, the conversations are mainly about pinning down how much value, where the greatest potential lies, and what’s needed to get there. Most have done something and are in the process of doing more.

WHAT’S HAPPENING IN 2022?

More data-driven processes - and perhaps we can start talking more about the future?

We talk less about advanced analytics, and more about becoming data-driven across all processes. We simply need to make fact-based decisions, look further ahead through prediction, and automate repetitive tasks that follow standard patterns. And speaking of prediction, I would really like more leaders to ask their business analysts to try looking at what might happen in the future (next week, next month and next year).

Process improvement work is (finally) becoming more data-driven

Personally, I hope that process improvement, where Lean has been the buzzword for decades, can in time merge with data-driven automation and problem-solving. The large consultancies now have both Lean consultants and data consultants, but the coordination and collaboration between them have not been impressive so far. 2022 must be the year someone seizes the opportunity and delivers the first Norwegian success stories that everyone talks about. The gauntlet has been thrown down, as they said in the old days.