DataOps in Practice

19.06.2023 | 5 min read | Tag: #dataops

In practice, DataOps can be divided into automation, continuous delivery, quality management, and collaboration. We look at what this actually means.

The Four Main Areas of DataOps

Automation

Automation is an important part of DataOps and is used in many areas to improve efficiency and reduce the risk of errors:

- Data collection and data transformation: Automated scripts and tools can retrieve data from different sources, identify errors in data, and transform data into a format suitable for analysis.

- Testing and validation: Automated testing processes help ensure data quality and can include everything from syntax validation to more complex logical tests. An increasing number of organizations are adopting data quality and validation tools such as Great Expectations.

- Orchestration: Automation is used to coordinate and manage workflows and data flows. Orchestration tools such as Airflow can automate a series of processes, including data loading, data cleansing, data transformation, and data validation, in the correct order and at the right time.

- Monitoring: Monitoring tools can automatically track and report on performance, errors, and other important metrics in real time. This makes it possible to quickly detect and fix problems.

- Model development and deployment: Version control and CI/CD tools such as Git and Azure DevOps provide control over how models and transformations are developed and promoted between environments. Automated processes can also be used to train, validate, and deploy machine learning models.

- Documentation: Automation can simplify the documentation process in several ways. It can be used to generate metadata, which gives a better understanding of the data. It can also be used to create code and API documentation directly from the source code, keep changelogs up to date, and track the data lifecycle (data lineage).

- Data platform and data products as code: A prerequisite for automation is that as much as possible is written as code, including infrastructure and service configuration through tools such as Terraform (Infrastructure as Code - IaC), transformation logic through tools such as dbt and Delta Live Tables (Data as Code - DaC), and logic related to advanced analytics through tools such as Jupyter (Notebooks).
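As a rough illustration of the orchestration point above, the following sketch runs loading, cleansing, transformation, and validation in dependency order using only the Python standard library. It is not how Airflow works internally; the step functions, the sample rows, and the `DEPENDENCIES` mapping are all hypothetical and chosen only to show the idea of "pipeline as code".

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline steps. Each step takes a list of row dicts and returns a new list.
def load(_rows):
    # Stand-in for reading from a real source system.
    return [
        {"id": 1, "amount": " 10.5 "},
        {"id": 2, "amount": None},
        {"id": 3, "amount": "7"},
    ]

def cleanse(rows):
    # Drop rows where the amount is missing.
    return [r for r in rows if r["amount"] is not None]

def transform(rows):
    # Convert the amount from text to a number.
    return [{"id": r["id"], "amount": float(r["amount"].strip())} for r in rows]

def validate(rows):
    # Fail the run if a negative amount slips through.
    for r in rows:
        if r["amount"] < 0:
            raise ValueError(f"negative amount in row {r['id']}")
    return rows

STEPS = {"load": load, "cleanse": cleanse, "transform": transform, "validate": validate}

# Each step maps to the set of steps that must finish before it starts.
DEPENDENCIES = {
    "load": set(),
    "cleanse": {"load"},
    "transform": {"cleanse"},
    "validate": {"transform"},
}

def run_pipeline():
    # Resolve a valid execution order from the declared dependencies.
    order = list(TopologicalSorter(DEPENDENCIES).static_order())
    rows = []
    for name in order:
        rows = STEPS[name](rows)
    return order, rows

order, result = run_pipeline()
print(order)
print(result)
```

Declaring dependencies as data rather than hard-coding the call sequence is the same idea an orchestrator applies at larger scale: the order is derived, and a failing step stops everything downstream.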

Continuous Delivery

CI/CD stands for “Continuous Integration” and “Continuous Delivery.” It involves practices that ensure you can deploy changes to data and data applications in a reliable and safe manner on an ongoing basis.

- Continuous Integration (CI): This is a practice where developers frequently (several times daily) integrate their individual code changes back into the main codebase (main branch). Each integration is then automatically tested to detect errors as early as possible.

- Continuous Delivery (CD): This is an extension of CI, where the new code changes are prepared for deployment to test or production after passing tests. Changes can be reviewed manually through code reviews, and often through automated checks as well.
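To make the CI step more concrete, here is a minimal sketch of the kind of automated test that could run on every integration. The transformation `normalize_order` and its field names are hypothetical; the tests are written as plain functions so that a test runner such as pytest could discover them, but they also run directly.

```python
def normalize_order(raw: dict) -> dict:
    """Hypothetical transformation: clean up one raw order record."""
    return {
        "order_id": int(raw["order_id"]),
        "country": raw["country"].strip().upper(),
        "amount": raw["amount_cents"] / 100,
    }

def test_normalize_order_cleans_fields():
    row = normalize_order({"order_id": "42", "country": " no ", "amount_cents": 1250})
    assert row == {"order_id": 42, "country": "NO", "amount": 12.5}

def test_normalize_order_rejects_missing_country():
    # A record without a country should fail loudly instead of passing through.
    try:
        normalize_order({"order_id": "1", "amount_cents": 100})
    except KeyError:
        return
    raise AssertionError("expected KeyError for missing country")

if __name__ == "__main__":
    test_normalize_order_cleans_fields()
    test_normalize_order_rejects_missing_country()
    print("all tests passed")
```

In a CI setup, a failing assertion here would block the change from being merged or promoted, which is what "detecting errors as early as possible" means in practice.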

Quality Management

Quality management involves a range of techniques to ensure that the data being used is accurate, reliable, and useful, and that key capabilities function as intended:

- Automated testing: Automated testing is used to continuously check the quality of the data and transformations processed in the CI/CD pipeline, and to verify that they comply with expected criteria.

- Quality metrics: Quality metrics or KPIs are used to quantify the quality of data when it is in production. This area is called “data observability” and is rapidly gaining traction as a standalone component within data platforms.

- Monitoring: Data flows and systems are continuously monitored to detect problems as they arise. Monitoring also includes routines for quickly initiating troubleshooting (often by the developers who created the logic in the first place).

- Data catalogs: Data catalogs can be used to document metadata about the data, including quality information and access control. Data lineage tools are used to track the origin and transformations of data, which helps maintain and understand quality throughout the entire data lifecycle.

- Dialogue about business needs: Quality management also involves close collaboration between different team members - for example data engineers, data analysts, and users - to ensure that the data and solutions meet business needs.
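The quality metrics and monitoring points above can be sketched as a small check that computes two common KPIs, completeness and freshness, and raises alerts when they fall outside agreed limits. The column names, sample rows, and thresholds below are assumptions for illustration only; dedicated data observability tools cover far more than this.

```python
from datetime import datetime, timedelta, timezone

def completeness(rows, column):
    """Share of rows where the column is present and not None."""
    if not rows:
        return 0.0
    filled = sum(1 for r in rows if r.get(column) is not None)
    return filled / len(rows)

def freshness_hours(rows, timestamp_column, now):
    """Hours elapsed since the most recently loaded row."""
    latest = max(r[timestamp_column] for r in rows)
    return (now - latest).total_seconds() / 3600

def check_quality(rows, now, min_completeness=0.95, max_age_hours=24):
    # Thresholds are hypothetical and would normally be agreed with the business.
    metrics = {
        "completeness": completeness(rows, "email"),
        "freshness_hours": freshness_hours(rows, "loaded_at", now),
    }
    alerts = []
    if metrics["completeness"] < min_completeness:
        alerts.append("email completeness below threshold")
    if metrics["freshness_hours"] > max_age_hours:
        alerts.append("data is older than allowed")
    return metrics, alerts

# Fixed timestamp so the example is reproducible.
now = datetime(2023, 6, 19, 12, 0, tzinfo=timezone.utc)
rows = [
    {"email": "a@example.com", "loaded_at": now - timedelta(hours=30)},
    {"email": None, "loaded_at": now - timedelta(hours=48)},
    {"email": "c@example.com", "loaded_at": now - timedelta(hours=48)},
    {"email": "d@example.com", "loaded_at": now - timedelta(hours=72)},
]

metrics, alerts = check_quality(rows, now)
print(metrics)
print(alerts)
```

With this sample data, both checks fail: one of four rows lacks an email, and the newest row is 30 hours old against a 24-hour limit.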

Collaboration

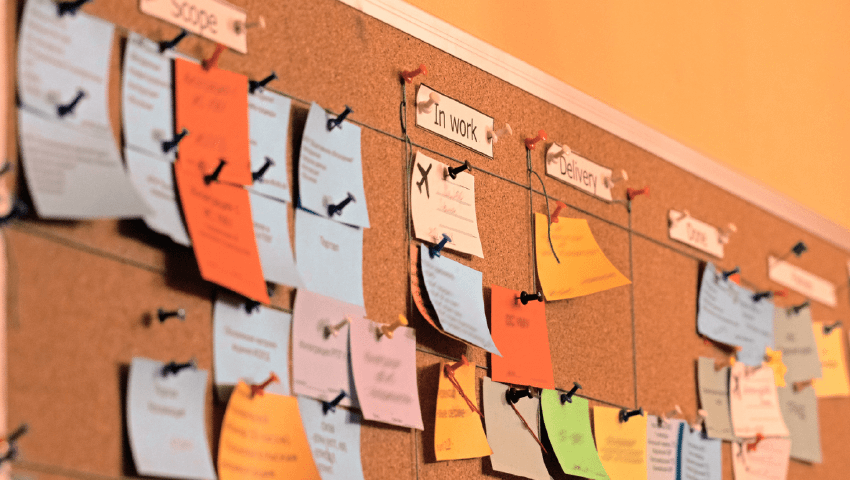

Instead of separating development and operational responsibilities between different teams, DataOps focuses on integrating these functions to achieve faster delivery of data and data products. In general, we achieve increased efficiency when there is full transparency around development and backlog prioritization, and when prioritization is done collectively within the various delivery teams.

Here are some key principles related to collaboration:

- Data deliveries that provide business value: Cross-functional teams owned and led by business resources work together to deliver what is needed to achieve the desired effects from data deliveries. This requires the development of data interfaces, transformations, datasets, ML algorithms, and more, but also the involvement of users, training, and process changes.

- Data platform teams that deliver great user and developer experiences: A dedicated data platform team collaborates closely with its customers - users and developers - to deliver the best possible services. Subject to access requirements and similar constraints, data should be quickly available for use and development.

Trends in DataOps

Several trends are driving the future of DataOps, including integration, augmentation, and observability.

1. Fusion with Other Data Disciplines

DataOps will increasingly need to interact with and support related practices connected to data and the application of data. Gartner has identified MLOps, ModelOps, and PlatformOps as complementary approaches for managing different ways of using data. MLOps is aimed at the development and versioning of machine learning, and ModelOps focuses on modeling, training, experimentation, and monitoring. Gartner characterizes PlatformOps as a comprehensive AI orchestration platform that includes DataOps, MLOps, ModelOps, and DevOps.

2. Augmented DataOps

AI is beginning to help manage and orchestrate the data infrastructure itself. Data catalogs are evolving into augmented data catalogs and analytics into augmented analytics with various AI-based capabilities. Similar techniques will gradually be applied to all other aspects of the DataOps pipeline, e.g., automated code review and automated documentation.

3. Data Observability

The DevOps community has for many years used performance management tools strengthened by observability infrastructure to help find and prioritize problems with applications. Vendors such as Acceldata, Monte Carlo, Precisely, Soda, and Unravel are developing comparable tools for data observability that focus on the data infrastructure itself. DataOps tools will likely increasingly use data observability data to optimize DataOps flows.