Guide to Data Contracts

13.07.2025 | 10 min read | Category: Data Governance | Tags: #Podcast, #Data Contracts

Data contracts have become a hot topic in modern data architecture in recent years. They are not about law, but about something much more practical and technical: how to ensure that data produced and consumed by different teams actually works together over time.

A data contract functions as an API for data

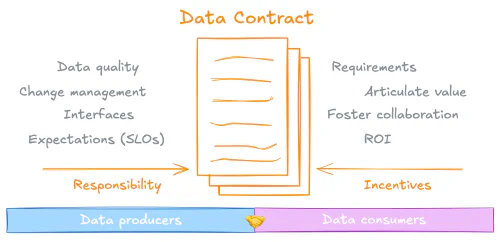

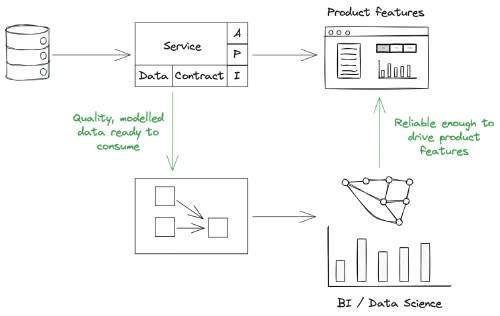

As platforms become more decentralized and more teams produce their own data products, the need grows for clear agreements between data owners and data consumers that can be validated technically. A data contract functions as an API for data: a specification of structure, quality and expectations that both producers and consumers adhere to.

In short: data contracts reduce surprises, increase quality and build trust across teams and technology.

The problem data contracts help solve

Data can be business-critical – but the rules of the game are often unclear.

Fewer errors in the data flow

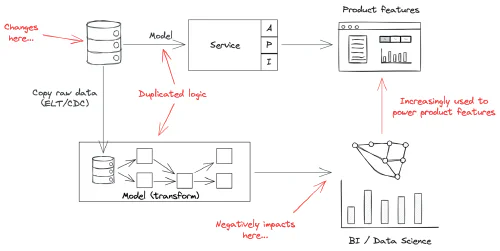

Data flows between systems and people – and this often happens without explicit agreements. In practice, this means that when a developer changes a column in a database, it can cause a report to fail, a machine learning model to produce errors, or a dashboard to stop working.

Such errors are rarely deliberate; they occur because the dependencies are hidden. No one has communicated what is allowed and what is not, and no one notices that something is wrong until a report, model or dashboard downstream actually fails.

Data contracts make these dependencies explicit and provide tools to test that they hold. This makes it possible to build solutions that are both self-service and reliable.

Better support for data sharing

Public and cross-sector requirements also set higher expectations for structured data sharing:

- The Norwegian Official Report NOU Med lov skal data deles (“By law, data shall be shared”) proposes requirements for making public data available, inspired by the Open Data Directive and the Data Governance Act.

- PSD2 requires API-based interfaces that open new markets and increase security in sharing.

Data contracts are a practical response to such requirements – and can be seen as a technical implementation of the principles behind the regulation.

Increased value in larger ecosystems

Data contracts must be understandable and implementable. There is much to learn from other standardization efforts that have proven their value over time:

- HL7 (healthcare): Very detailed, but too complex in practice

- EHF (commerce/finance): Simple enough to work, widely adopted

Both are examples of standards that provide value across an entire ecosystem, much as data contracts can. We want an implementation of data contracts that resembles EHF more than HL7: simple enough to be adopted, with minimum requirements eventually standardized for a given use case, data type and/or industry.

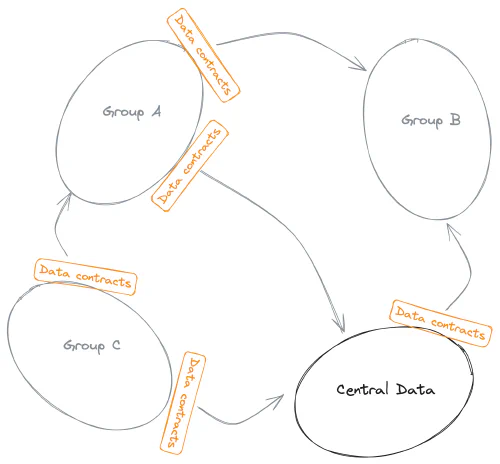

Data contracts and Data Mesh

In a Data Mesh organization, data contracts are a central building block.

Data as a product

In a data mesh-inspired organization, responsibility for data is distributed to domain or product teams. These teams develop their own data products, often in the form of tables, APIs or datasets, that others should be able to use. Data products must therefore have clear properties: standardized, shareable, owned by a team, and managed throughout their lifecycle. And remember: not all data qualifies as a data product.

Data products need specifications of properties such as quality, warranty and service, in the same way as physical products:

When you buy an electric scooter, you expect specifications on range, warranty and speed. Without these, the product would be hard to sell and impossible to trust. The same applies to data products – without clear specifications, data consumers quickly lose trust, and value diminishes.

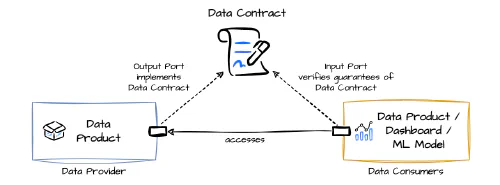

The contract operationalizes the data product

The data contract becomes the connecting link that operationalizes “data as a product.” It specifies exactly what is delivered, at what quality and how often (the SLA), and it is actively used in the CI/CD pipeline and in communication between teams. The producer team states precisely what it promises to deliver, and consumer teams can trust that what they build on will not suddenly change.

Examples of data contract usage

Here are three examples of data contract usage:

Example 1: Customer data in the banking and finance sector

A team in a bank delivers customer data as a data product to the risk management department. The data contract specifies:

- Update frequency: every hour, from 08:00 to 18:00.

- Fields: customer_id, credit_risk, loan_amount, status.

- Quality requirements: no duplicates, maximum 0.5% null values.

- Alerting: automatic alerts on breaches, integrated with the bank’s risk management systems.

- Semantic definition: credit risk is calculated based on the customer’s credit score, payment history and external data.

This lets the risk team trust the data quickly and detect and handle risk situations faster.
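The quality requirements in this contract are concrete enough to check in code. The sketch below is a minimal plain-Python illustration, assuming a delivered batch arrives as a list of dictionaries and reading “no duplicates” as “no customer_id appears twice”; the function name check_customer_batch is hypothetical. In practice a tool such as dbt or Great Expectations would usually run checks like these.

```python
from typing import Any

# Field names and thresholds taken from the example contract above.
CONTRACT_FIELDS = ["customer_id", "credit_risk", "loan_amount", "status"]
MAX_NULL_RATIO = 0.005  # maximum 0.5 % null values per field


def check_customer_batch(rows: list[dict[str, Any]]) -> list[str]:
    """Return the contract breaches found in one delivered batch (empty list if none)."""
    breaches: list[str] = []

    # "No duplicates" is interpreted here as: no customer_id appears twice.
    seen: set[Any] = set()
    duplicates: set[Any] = set()
    for row in rows:
        customer_id = row.get("customer_id")
        if customer_id in seen:
            duplicates.add(customer_id)
        seen.add(customer_id)
    if duplicates:
        breaches.append(f"duplicate customer_id: {sorted(duplicates, key=str)}")

    # Null tolerance, checked per field; a missing key counts as null.
    for field in CONTRACT_FIELDS:
        nulls = sum(1 for row in rows if row.get(field) is None)
        ratio = nulls / len(rows) if rows else 0.0
        if ratio > MAX_NULL_RATIO:
            breaches.append(f"{field}: {ratio:.2%} null values (max {MAX_NULL_RATIO:.1%})")
    return breaches
```

An empty list means the batch satisfies the contract; anything else would feed the automatic alerting described above.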

Example 2: Power consumption and grid balancing in the energy sector

An energy supplier delivers power consumption data from smart meters as a data product to the grid operator for balancing the power grid. The contract specifies:

- Update frequency: every 5 minutes.

- Fields: meter_id, power_consumption_kWh, timestamp, customer_type.

- Validation rules: only positive values; meter_id must exist in master data.

- Semantics: power_consumption_kWh refers to actual consumption, not estimates.

- Pricing: internal billing based on data volume and update frequency.

- Alerting: automatic notification to operational control systems if values are missing or outside expected ranges.

This enables precise and reliable real-time balancing of the power grid, as well as transparent cost sharing.
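The validation rules can be sketched the same way. In this hypothetical example, MeterReading mirrors the contract's fields, a set of master_meter_ids stands in for the master data lookup, and validate_readings is an illustrative name; each violation it returns is what would be routed to the operational control systems.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class MeterReading:
    meter_id: str
    power_consumption_kWh: float  # field name kept as in the contract
    timestamp: datetime
    customer_type: str


def validate_readings(
    readings: list[MeterReading], master_meter_ids: set[str]
) -> list[tuple[MeterReading, str]]:
    """Return (reading, reason) pairs for readings that break the contract's rules.

    Only the first rule a reading breaks is reported.
    """
    violations: list[tuple[MeterReading, str]] = []
    for reading in readings:
        # Rule: meter_id must exist in master data.
        if reading.meter_id not in master_meter_ids:
            violations.append((reading, "unknown meter_id"))
        # Rule: only positive values (zero is rejected, as the contract is written).
        elif reading.power_consumption_kWh <= 0:
            violations.append((reading, "non-positive consumption"))
    return violations
```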

Example 3: Machine maintenance in industrial production

A production team delivers sensor data for condition-based machine maintenance to a maintenance team. The contract specifies:

- Update frequency: every 15 minutes.

- Fields: machine_id, temperature, vibration, operating_time.

- Data quality: maximum 1% abnormal values, 100% coverage of critical machines.

- Alerting: automatic alerting directly into maintenance systems when critical values exceed thresholds.

- Semantic definition: vibration and temperature are used to predict machine wear based on defined threshold values.

The maintenance team can thereby effectively plan maintenance before failures occur, reduce downtime and optimize resource utilization.
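The two data-quality rules can be expressed as a small check per batch. This sketch assumes that an “abnormal value” means a temperature or vibration reading outside a fixed range; the ranges in NORMAL_RANGES and the function check_sensor_batch are hypothetical placeholders for thresholds the real contract would define.

```python
# Hypothetical normal ranges per measurement; a real contract would define these.
NORMAL_RANGES = {"temperature": (-20.0, 120.0), "vibration": (0.0, 50.0)}
MAX_ABNORMAL_RATIO = 0.01  # maximum 1 % abnormal values


def check_sensor_batch(rows: list[dict], critical_machines: set[str]) -> dict:
    """Evaluate the example's two data-quality rules for one batch of readings."""
    total = abnormal = 0
    for row in rows:
        for measure, (low, high) in NORMAL_RANGES.items():
            total += 1
            if not low <= row[measure] <= high:
                abnormal += 1
    ratio = abnormal / total if total else 0.0

    # 100 % coverage: every critical machine must appear in the batch.
    reporting = {row["machine_id"] for row in rows}
    return {
        "abnormal_ratio": ratio,
        "abnormal_ok": ratio <= MAX_ABNORMAL_RATIO,
        "missing_critical": sorted(critical_machines - reporting),
    }
```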

What does a data contract contain?

Data contracts are a practical application of the principle “governance as code.” They function as contractual interfaces for data, and make it possible to specify and validate expectations for data – preferably as early as possible in the development process. In the best case, the contract is included as an integration test in the CI/CD pipeline, so that errors are detected before code and data are moved to production.

Three key components

A good contract always describes three things:

- Schema – Structure, columns, data types, formats.

- Business logic – Expected values, null tolerance, ranges and domains.

- SLAs – Update frequency (e.g. SLA1 for critical data every 5 minutes, SLA2 for less critical data daily).

The contract should always be validated programmatically in a CI/CD pipeline. Where it is enforced depends on the organization’s architecture and technology stack, but at a minimum it should be monitored and have a defined process for handling breaches.
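To make the three components concrete, the sketch below models a contract as a small Python object holding a schema, per-column business rules and an SLA tier, and checks both a single record and the data's freshness. The Contract class, its field names and the rule format are assumptions for illustration, not a standard contract format; the SLA windows follow the SLA1/SLA2 example above.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Any, Callable

# SLA tiers from the text: SLA1 = every 5 minutes, SLA2 = daily.
SLA_TIERS = {"SLA1": timedelta(minutes=5), "SLA2": timedelta(days=1)}


@dataclass
class Contract:
    schema: dict[str, type]  # column name -> expected Python type
    rules: dict[str, Callable[[Any], bool]] = field(default_factory=dict)  # business logic
    sla: str = "SLA2"

    def check_record(self, record: dict[str, Any]) -> list[str]:
        """Check one record against schema and business rules.

        Nulls fail the type check here; a real contract would state a null tolerance.
        """
        errors = []
        for column, expected in self.schema.items():
            if column not in record:
                errors.append(f"{column}: missing")
                continue
            value = record[column]
            if not isinstance(value, expected):
                errors.append(f"{column}: expected {expected.__name__}, got {type(value).__name__}")
                continue
            rule = self.rules.get(column)
            if rule is not None and not rule(value):
                errors.append(f"{column}: business rule failed for {value!r}")
        return errors

    def is_fresh(self, last_updated: datetime, now: datetime) -> bool:
        """True if the last update falls within the SLA window."""
        return now - last_updated <= SLA_TIERS[self.sla]
```

Running checks like these on every commit is what turns the contract from documentation into a test.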

The contract as a metadata object

In addition, the contract should have clear:

- ID and version control: A unique identifier for retrieval and reference in pipelines and documentation, as well as a basis for handling changes

- Resource name and namespace: Which specific dataset or data resource the contract applies to. The mapping should be 1:1 (e.g. vehicle_status in the transport domain).

- Documentation: What do the data mean? How are they used? Documentation should be mandatory.

- Owner: The producer has ownership responsibility; the contract is developed together with the most important consumers.
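The metadata fields map naturally onto a small record type. In this sketch, ContractMetadata and register are hypothetical names, the MAJOR.MINOR.PATCH version format is an assumption (the text only asks for version control), and a plain dictionary stands in for a contract registry that enforces the 1:1 rule between contract and resource.

```python
import re
from dataclasses import dataclass

SEMVER = re.compile(r"\d+\.\d+\.\d+")  # assumed version format


@dataclass(frozen=True)
class ContractMetadata:
    contract_id: str    # unique identifier used in pipelines and documentation
    version: str        # e.g. "1.2.0"; basis for handling changes
    namespace: str      # domain, e.g. "transport"
    resource: str       # exactly one dataset, e.g. "vehicle_status"
    documentation: str  # what the data mean and how they are used
    owner: str          # producer team

    @property
    def qualified_name(self) -> str:
        """Namespace plus resource name identifies the one resource the contract covers."""
        return f"{self.namespace}.{self.resource}"

    def problems(self) -> list[str]:
        issues = []
        if not SEMVER.fullmatch(self.version):
            issues.append("version must look like MAJOR.MINOR.PATCH")
        if not self.documentation.strip():
            issues.append("documentation is mandatory")
        if not self.owner.strip():
            issues.append("owner is missing")
        return issues


def register(registry: dict[str, ContractMetadata], meta: ContractMetadata) -> None:
    """Enforce the 1:1 rule: refuse a second, different contract for the same resource."""
    existing = registry.get(meta.qualified_name)
    if existing is not None and existing.contract_id != meta.contract_id:
        raise ValueError(f"{meta.qualified_name} already has contract {existing.contract_id}")
    registry[meta.qualified_name] = meta
```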

Once the foundation is in place, you can extend with:

- PII classification and privacy requirements

- Compliance rules

- Links to taxonomies and ontologies

- Quality metrics (e.g. in accordance with DQV-AP-NO)

A data contract is not just a specification; it is a test foundation, a governance tool and an important link between people and systems in a modern data organization. You can think of the contract as a strengthened variant of a schema, with semantics, validation, usage context and governance built in.

More about semantics and taxonomy

Data contracts often describe a single entity, e.g. “customer.” For that entity to be meaningful across teams, it should be linked to a semantic structure such as a taxonomy or concept model.

“Open Data Contracts are required to be well-structured and templated. It defines and logically represents only one single entity to help producers and consumers understand the schema and semantics around it.” – opendatacontract.com

By linking contracts to concepts and taxonomies, you achieve reuse and understanding across teams. “Customer” can then be a type of “personal actor,” and the contract can inherit rules or definitions from there.
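Inheritance from a concept model can be sketched as walking up the concept hierarchy and merging rules, with more specific concepts overriding general ones. The concepts, the root concept “actor” and the rule labels below are hypothetical; only the customer to personal actor relationship comes from the text.

```python
# Hypothetical concept model: each concept names its parent and its own rules.
CONCEPTS: dict[str, dict] = {
    "actor": {"parent": None, "rules": {"id": "required"}},
    "personal actor": {"parent": "actor", "rules": {"national_id": "pii"}},
    "customer": {"parent": "personal actor", "rules": {"customer_since": "date"}},
}


def effective_rules(concept: str) -> dict[str, str]:
    """Collect rules from a concept and all its ancestors; nearer concepts override."""
    chain = []
    current = concept
    while current is not None:
        chain.append(current)
        current = CONCEPTS[current]["parent"]
    rules: dict[str, str] = {}
    for name in reversed(chain):  # start at the root so more specific concepts win
        rules.update(CONCEPTS[name]["rules"])
    return rules
```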

Technical implementation in practice

Data contracts should live as code so that they can be validated and tested. Common approaches include:

YAML/JSON

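The contract is described in a machine-readable format. The example below is a dbt schema file in YAML; its identifiers are Norwegian (transaksjon = transaction; NY, FERDIG and ANNULLERT = new, completed and cancelled):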

    models:
      - name: transaksjon
        columns:
          - name: transaksjon_id
            tests:
              - not_null
              - unique
          - name: status
            tests:
              - accepted_values:
                  values: ["NY", "FERDIG", "ANNULLERT"]

dbt contracts

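dbt supports model contracts: with enforced: true set under contract in a model's config, dbt checks at build time that the model's output columns and data types match those declared in the YAML. Running dbt in the CI/CD pipeline makes this check part of every change.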
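The idea behind an enforced contract can be illustrated with a simple comparison of declared and actual columns. This is a simplified sketch, not dbt's implementation: the function contract_mismatches is hypothetical, data types are compared as case-insensitive strings, and columns the model produces but the contract does not declare are reported as breaches too.

```python
def contract_mismatches(declared: dict[str, str], actual: dict[str, str]) -> list[str]:
    """Compare declared column -> data type with the columns a model actually produced."""
    problems = []
    for column, data_type in declared.items():
        if column not in actual:
            problems.append(f"missing column: {column}")
        elif actual[column].lower() != data_type.lower():  # case-insensitive type names
            problems.append(f"type mismatch on {column}: declared {data_type}, got {actual[column]}")
    for column in sorted(actual.keys() - declared.keys()):
        problems.append(f"undeclared column: {column}")
    return problems
```

In CI, a non-empty result would fail the build, for example by exiting with a non-zero status.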

Testing tools

- Great Expectations is used for declarative data tests

- Avro, JSON Schema, Protobuf are used for contracts in APIs and messaging systems

Example workflow

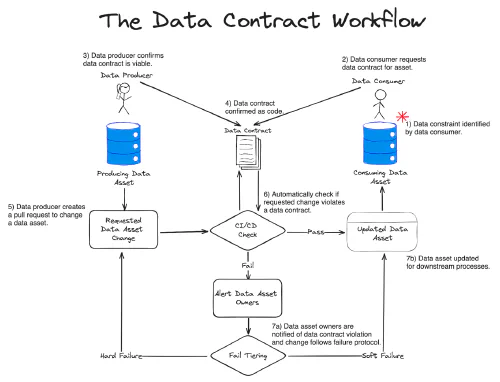

As illustrated in the figure, the workflow for data contracts can consist of the following steps:

- The data consumer identifies a need or a limitation in the data.

- The data consumer requests a data contract for a given data resource.

- The data producer confirms that the data contract is feasible.

- The data contract is confirmed and formalized in code.

- The data producer creates a pull request to change the data resource.

- The change is automatically checked to see if it breaks any existing data contracts.

- The change results in either a breach or no breach:

  a. If the data contract is breached, the owner of the data resource is notified, and the change is handled according to an error protocol.

  b. If the change does not break the contract, the data resource is updated for further processing.
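The automated check and the breach/no-breach branch in the last two steps can be sketched as a CI gate. The sketch assumes a contract promises a set of columns with data types, treats removing or retyping a promised column as a breach while allowing new columns, and takes a hypothetical notify callback in place of a real notification channel; DataContract, breaches_for and check_pull_request are illustrative names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class DataContract:
    contract_id: str
    resource: str
    owner: str
    columns: dict[str, str]  # column name -> data type promised to consumers


def breaches_for(contract: DataContract, proposed: dict[str, str]) -> list[str]:
    """A change breaks a contract if a promised column disappears or changes type.

    New, unpromised columns are allowed.
    """
    found = []
    for column, data_type in contract.columns.items():
        if column not in proposed:
            found.append(f"{contract.contract_id}: column {column} removed")
        elif proposed[column] != data_type:
            found.append(f"{contract.contract_id}: {column} changed {data_type} -> {proposed[column]}")
    return found


def check_pull_request(
    resource: str,
    proposed: dict[str, str],
    contracts: list[DataContract],
    notify: Callable[[str, list[str]], None],
) -> bool:
    """Return True if the change may proceed; otherwise notify owners and block it."""
    blocked = False
    for contract in contracts:
        if contract.resource != resource:
            continue
        problems = breaches_for(contract, proposed)
        if problems:
            notify(contract.owner, problems)  # hand over to the error protocol
            blocked = True
    return not blocked
```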

Pitfalls and anti-patterns

Typical anti-patterns include:

- The contract is just documentation – it is not tested

- Changes are made without versioning, and consumers’ use of the data breaks

- No clear owner

A good contract:

- Is machine-readable

- Is testable

- Is versioned

- Has clear ownership in the producer team

- Has been agreed with the most important consumers

- Has a clear process for change management

Does the producer or consumer own the contract?

Formally, the contract is owned by the producer. It is the team that manages the data product that publishes the interface. But good practice dictates that the contract is developed in collaboration with consumers – preferably in joint meetings or pull requests.

The contract should be precise and stable – and changes must happen through versioning, so that existing consumers continue to function.
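Versioning can follow a semantic-versioning scheme, which is an assumption here rather than something the text prescribes: a breaking change gets a new major version, a compatible change a new minor version, and each consumer pins the major version it was built against. The helpers parse, next_version and resolve below are hypothetical.

```python
from typing import Optional


def parse(version: str) -> tuple[int, int, int]:
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def next_version(current: str, breaking: bool) -> str:
    """Breaking changes get a new major version; compatible ones a new minor version."""
    major, minor, _ = parse(current)
    return f"{major + 1}.0.0" if breaking else f"{major}.{minor + 1}.0"


def resolve(published: list[str], pinned_major: int) -> Optional[str]:
    """Latest published version for a consumer pinned to one major version."""
    compatible = [parse(v) for v in published if parse(v)[0] == pinned_major]
    if not compatible:
        return None
    return ".".join(str(n) for n in max(compatible))  # tuples compare numerically
```

Under this scheme, publishing 2.0.0 leaves a consumer pinned to major version 1 on the latest 1.x release until it chooses to migrate.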

Organizational challenges

Implementing data contracts requires organizational buy-in and support from top management. Putting contracts in place takes time and prioritization, but less time than is spent cleaning up after errors.

Here are some tips from the field:

- Start with one team and one data product

- Showcase success stories internally

- Get support from the CDO/data officer from the start

- Use existing tools and formats (e.g. dbt, Terraform, Databricks, Great Expectations)

Summary

Data contracts help organizations create robust, scalable and trustworthy data sharing. It is not hard to get started – but it requires some structure, ownership and tool support.

Start small. Formalize what you are already doing. Then gradually build up technical validations and a culture for sharing and ownership.

Want to learn more?

Visit:

- Open Data Contract Standard

- DCAT-AP-NO is used for publishing and cataloging data products

- DQV-AP-NO describes data quality, with dimensions such as Accuracy, Completeness, Timeliness, Validity and Lineage

- Read: Andrew Jones: Driving Data Quality with Data Contracts (2023)

- Read: Chad Sanderson, Mark Freeman: Data Contracts (planned Nov. 2025)

You can also listen to the podcast “Datautforskerne”, episode 15, where Safurudin Mahic and Magne Bakkeli discuss data contracts. The episode is available on Spotify, Apple and Acast. Like and subscribe!