Data modelling - hot or not?

27.10.2024 | 7 min read | Category: Data Governance | Tags: #Podcast, #Data Modelling, #Organisation

Data modelling has made a strong comeback after spending time in the shadows. This article explores why structuring and standardising data has once again become a critical part of the data ecosystem, and how the information architect helps balance flexibility and control in modern data platforms.

The information architect’s comeback - order and structure are back in fashion!

In the 1990s and 2000s, the information architect was a central figure in the heyday of the data warehouse. During this period, the focus was on building structured, reliable and centralised data for reporting and analytics, and the information architect ensured unified data modelling and harmonisation. In data warehouse projects in large enterprises, for example, the information architect made sure that information from various source systems was collected and harmonised, giving the organisation a holistic view of its data.

Then came the data scientists from around 2011 onwards, and data modelling gradually became less popular. Focus shifted towards unstructured and semi-structured data, flexibility and experimentation, where there was less need for the strict structures and standards the information architect has traditionally championed. Data lakes were seen as a way to handle larger data volumes with fewer restrictions, and data scientists took over as the central actors in the data ecosystem. Specific tools such as Hadoop and NoSQL databases made it easier to work with unstructured data, which led to information architects being partly sidelined.

Now, however, the information architect role is seeing a renaissance. We have lived through several years of the data lakehouse era, which keeps both unstructured and structured data: raw data in its original form alongside data modelled for various analytical purposes. The information architect is back, and is once again proving important for balancing the flexibility of data lakes with the consistency and control required to support modern data analysis and decision-making. The data lakehouse model combines the advantages of data lakes and data warehouses, making it easier both to experiment and to ensure that data is reliable enough for analytical purposes, which gives the information architect a key role in achieving this balance.

The information architect role is based on established frameworks

Many have experienced the difficulty of merging data from different sources, for example when data from CRM systems and ERP systems needs to be linked together. Different formats and a lack of shared definitions can make this extremely challenging. This is where the information architect comes in. The role is about preparing data so that it can be used across dimensions such as time, geography and product, and about ensuring shared definitions and standards.
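To make the CRM and ERP example concrete, here is a minimal sketch of how two exports with different formats could be mapped onto one shared customer definition. The field names, the `Customer` class and the country lookup are all hypothetical and chosen only for illustration; real source systems will look different.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class Customer:
    """Shared (hypothetical) definition of a customer for all consumers."""
    customer_id: str
    name: str
    country: str   # ISO 3166-1 alpha-2 code, e.g. "NO"
    created: date


# Hypothetical lookup from free-text country names to ISO codes.
COUNTRY_CODES = {"norway": "NO", "sweden": "SE", "denmark": "DK"}


def from_crm(record: dict) -> Customer:
    """Map an assumed CRM export row onto the shared definition."""
    return Customer(
        customer_id=record["AccountId"].strip().upper(),
        name=record["AccountName"].strip(),
        country=COUNTRY_CODES[record["Country"].strip().lower()],
        created=datetime.strptime(record["CreatedOn"], "%d.%m.%Y").date(),
    )


def from_erp(record: dict) -> Customer:
    """Map an assumed ERP export row onto the shared definition."""
    return Customer(
        customer_id=record["cust_no"].strip().upper(),
        name=record["cust_name"].strip(),
        country=record["country_iso"].strip().upper(),
        created=date.fromisoformat(record["created_date"]),
    )


crm_row = {"AccountId": "c-1001", "AccountName": " Nordic Foods AS ",
           "Country": "Norway", "CreatedOn": "03.02.2021"}
erp_row = {"cust_no": "C-1001", "cust_name": "Nordic Foods AS",
           "country_iso": "no", "created_date": "2021-02-03"}

# Once both rows follow the shared definition, they compare as equal.
same_customer = from_crm(crm_row) == from_erp(erp_row)
```

The point of the sketch is that the harmonisation rules (identifier casing, country codes, date formats) live in one agreed definition rather than being reinvented in every report.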

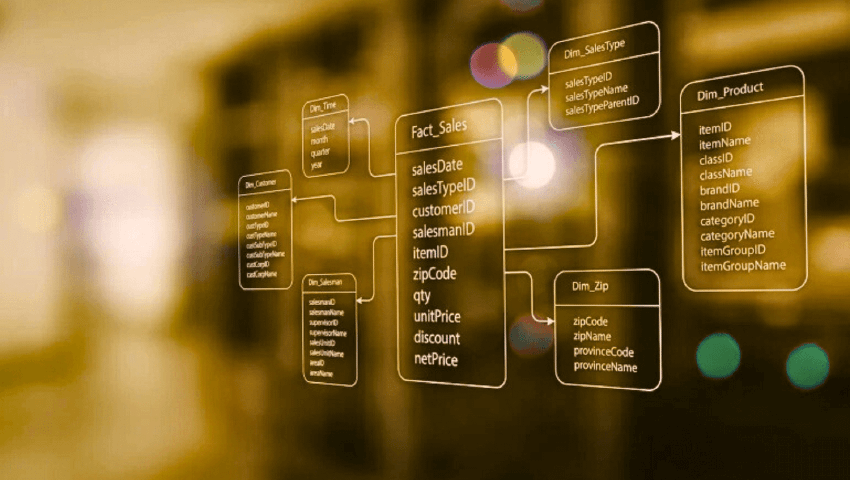

The information architect is responsible for a range of tasks, including data modelling, establishing data standards, ensuring data quality, and designing data contracts. They use tools such as ER modelling, SQL, and data catalogues to ensure good structuring and availability of data. Important techniques include normalisation, dimensional modelling and data integration. Normalisation helps reduce redundancy and improve data quality by organising data into relations, whilst dimensional modelling helps structure data for analytical purposes, making data easier to understand and use in reports and analyses. Their competence spans from technical data knowledge to business understanding, and collaboration with other roles such as Data Engineers, Data Scientists and Enterprise Architects is crucial for success.
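As an illustration of dimensional modelling, the following sketch builds a tiny star schema in an in-memory SQLite database: one fact table with measures and two dimension tables that describe them. Table names, columns and sample rows are invented for the example and are not taken from any particular platform.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Dimensions hold descriptive attributes; the fact table holds measures
# plus a key into each dimension.
conn.executescript("""
    CREATE TABLE dim_product (
        product_key  INTEGER PRIMARY KEY,
        product_name TEXT NOT NULL,
        category     TEXT NOT NULL
    );
    CREATE TABLE dim_date (
        date_key INTEGER PRIMARY KEY,
        year     INTEGER NOT NULL,
        month    INTEGER NOT NULL
    );
    CREATE TABLE fact_sales (
        product_key INTEGER NOT NULL REFERENCES dim_product(product_key),
        date_key    INTEGER NOT NULL REFERENCES dim_date(date_key),
        quantity    INTEGER NOT NULL,
        amount      REAL NOT NULL
    );
""")

conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?)", [
    (1, "Espresso beans", "Coffee"),
    (2, "Filter coffee", "Coffee"),
    (3, "Green tea", "Tea"),
])
conn.executemany("INSERT INTO dim_date VALUES (?, ?, ?)", [
    (20240115, 2024, 1),
    (20240210, 2024, 2),
])
conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?, ?)", [
    (1, 20240115, 2, 200.0),
    (2, 20240115, 1, 80.0),
    (3, 20240115, 4, 120.0),
    (1, 20240210, 1, 100.0),
])

# A typical report: slice the measure by attributes from both dimensions.
sales_by_category_month = conn.execute("""
    SELECT p.category, d.year, d.month, SUM(f.amount) AS total_amount
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    JOIN dim_date AS d ON d.date_key = f.date_key
    GROUP BY p.category, d.year, d.month
    ORDER BY p.category, d.year, d.month
""").fetchall()
```

Because product and date attributes are stored once in their dimensions, the same fact rows can be sliced by category, month or any other attribute without changing the fact table.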

The information architect is most involved in the early stages of a data product’s development lifecycle, when data is structured, quality-assured, and made available for further use. This includes tasks such as defining data standards, establishing data quality requirements, and ensuring that the necessary validation rules are in place to catch errors early in the process. The books by Kimball and Inmon in the 1980s and 1990s established the theoretical framework for data modelling, which information architects practise. These frameworks have left deep marks on how we understand normalisation, dimensions and facts, and they have formed the foundation for many of today’s data platforms.
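The validation rules mentioned above could be sketched roughly as follows. This is a simplified illustration, not a reference to any specific data quality tool; the rule names, fields and allowed currency codes are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    """A named check that returns True when a record passes."""
    name: str
    check: Callable[[dict], bool]


# Hypothetical rules for an incoming order record.
RULES = [
    Rule("customer_id is present",
         lambda r: bool(r.get("customer_id"))),
    Rule("amount is a non-negative number",
         lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
    Rule("currency is a known code",
         lambda r: r.get("currency") in {"NOK", "EUR", "USD"}),
]


def validate(record: dict) -> list[str]:
    """Return the names of all rules the record fails."""
    return [rule.name for rule in RULES if not rule.check(record)]


def split_valid_invalid(records: list[dict]):
    """Separate clean records from rejected ones, keeping the reasons."""
    valid, rejected = [], []
    for record in records:
        failures = validate(record)
        if failures:
            rejected.append((record, failures))
        else:
            valid.append(record)
    return valid, rejected


incoming = [
    {"customer_id": "C-1001", "amount": 250.0, "currency": "NOK"},
    {"customer_id": "", "amount": -5, "currency": "GBP"},
    {"customer_id": "C-1002", "amount": None, "currency": "EUR"},
]
valid, rejected = split_valid_invalid(incoming)
```

Running the checks at ingestion, and keeping the reason for each rejection, is one way to catch errors early instead of discovering them in a report.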

Architects hold the key to making domain division work

The enterprise architect’s role has also evolved in step with shifting technological landscapes. Whilst the information architect focuses specifically on structuring and managing data, the enterprise architect has a broader perspective that covers technological infrastructure, business processes and strategic alignment. Both roles are necessary to ensure that data and technology support the organisation’s goals in a holistic way.

A concrete challenge in a domain-divided architecture is ensuring that different domains don’t develop overlapping or contradictory data models, which can lead to problems with data quality and interoperability. Here, the information architect plays a decisive role by designing shared data standards and ensuring a holistic architecture that can be used across domains. For example, standardising customer definitions across domains can help ensure that all teams have a shared understanding of what a “customer” is, thereby avoiding inconsistency in analyses and reports.
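One lightweight way to spot contradictory models is to compare the schemas each domain publishes. The sketch below assumes, purely for illustration, that every domain exposes its fields as a simple name-to-type mapping; the domain names and fields are hypothetical.

```python
from collections import defaultdict

# Hypothetical schemas published by three domains.
DOMAIN_SCHEMAS = {
    "sales": {"customer_id": "string", "customer_since": "date",
              "segment": "string"},
    "finance": {"customer_id": "integer", "customer_since": "date",
                "credit_limit": "decimal"},
    "support": {"customer_id": "string", "segment": "string"},
}


def find_conflicts(schemas: dict) -> dict:
    """Return fields that share a name but are typed differently."""
    types_by_field = defaultdict(lambda: defaultdict(list))
    for domain, fields in schemas.items():
        for field, field_type in fields.items():
            types_by_field[field][field_type].append(domain)
    return {
        field: {t: sorted(domains) for t, domains in by_type.items()}
        for field, by_type in types_by_field.items()
        if len(by_type) > 1
    }


conflicts = find_conflicts(DOMAIN_SCHEMAS)
```

Here `customer_id` is flagged because finance types it differently from sales and support. A check like this only catches structural disagreement; agreeing on what a “customer” actually means still requires a conversation between the domains.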

Today, it is increasingly common to distribute responsibility for architecture and data to functional or process-oriented domains, and both the information architect and the enterprise architect must adapt their approaches. The information architect helps ensure that data products can be shared and scaled effectively without sacrificing data quality, whilst the enterprise architect ensures that the technological and organisational frameworks enable effective collaboration. Adapting to distributed data domains requires both roles to work closely together to define clear guidelines, standards and architecture principles that ensure both scalability and flexibility across the organisation’s data ecosystem.

Information architect vs Data Engineer: Two sides of the same coin

It’s difficult to draw a clear line between information architects and Data Engineers. The latter often go deeper into specific data areas and spend more time writing logic and code, whilst the information architect works at a higher level and sees the bigger picture. For example, a Data Engineer might work on optimising an ELT process that fetches data from a source, whilst the information architect focuses on how that data should be structured and used in a broader business context. Many Data Engineers gradually take on information architecture responsibilities over time, spending more and more of their time on maintaining the big picture rather than coding.

In a complex data ecosystem, it’s difficult to have one information architect with complete oversight of everything. Instead, data modelling competence can be spread across several people. This scales better and avoids bottlenecks. I personally prefer having few dedicated information architects (i.e. ones who don’t code) and relying instead on Data Engineers who can model.

| Role | Information Architect | Data Engineer |
|---|---|---|
| Main focus | High-level structuring and modelling of data | Writing logic and code for data collection and processing |
| Tasks | Data modelling, data quality, data standards | Data flow, ETL processes, optimisation |
| Tools | ER modelling, SQL, data catalogues | Python, SQL, Spark, tools for data transformation, orchestration, lineage, etc. |
| Competence | Business understanding, data modelling | Programming, data processing, system integration |
| Collaborators | Data scientists, enterprise architects, data engineers, domain experts/key users | Data scientists, information architects, developers, source system owners, key users |
| Most involved in | Early phase of data product development | All phases of data flow and data product development |

Is data modelling still relevant in the AI era?

AI has revolutionised the way we analyse data, and it can be tempting to think that much of data modelling is no longer necessary. With AI you can identify patterns, extract insights, and build predictive models with minimal human intervention. For example, tools like AutoML and large language models can reduce the need for manual modelling by automating certain aspects of the process. Nevertheless, data modelling has an important role to play, especially when it comes to understanding data and data context.

Data modelling ensures a structured approach to data analysis and makes it easier to quality-assure results. Even with powerful AI tools, we still need human understanding to ensure that data is used correctly and to avoid bias and misinterpretation in models. Models trained on poor or incorrectly structured data can produce unreliable results.

Data modelling may have taken a less visible role in the early stages of data product development, but its value becomes clear when data gains broader use. Experimentation can yield valuable insights, but to ensure consistent results over time, modelling is necessary. When data becomes part of the organisation’s long-term strategy, quality assurance and modelling become critical steps. This is where the information architect plays a decisive role - in ensuring the balance between freedom to experiment and the need for stability and structure.

Although the information architect’s role must adapt to new tools and methods, the core tasks remain relevant. By combining experience, technical skills and business understanding, information architects can not only support effective development of data products, but also ensure that data has the necessary quality and is ready to support AI applications in a reliable way.

Want to learn more?

Listen to the podcast “Datautforskerne” (Data Explorers), episode 8, where Eystein Kleivenes and Magne Bakkeli discuss the return of the information architect. The episode is available on Spotify, Apple and Acast.
