GUI vs Code - What Should I Choose for Data Transformation?

26.04.2023 | 8 min read | Category: Data Engineering | Tags: #data platform, #data warehouse, #data integration, #data transformation

Data transformation is an important part of the ELT process. Graphical user interfaces (GUI) and code are the two most common approaches to data transformation, but which one should you choose? In this article, we discuss the pros and cons of each and help you determine which best suits your needs.

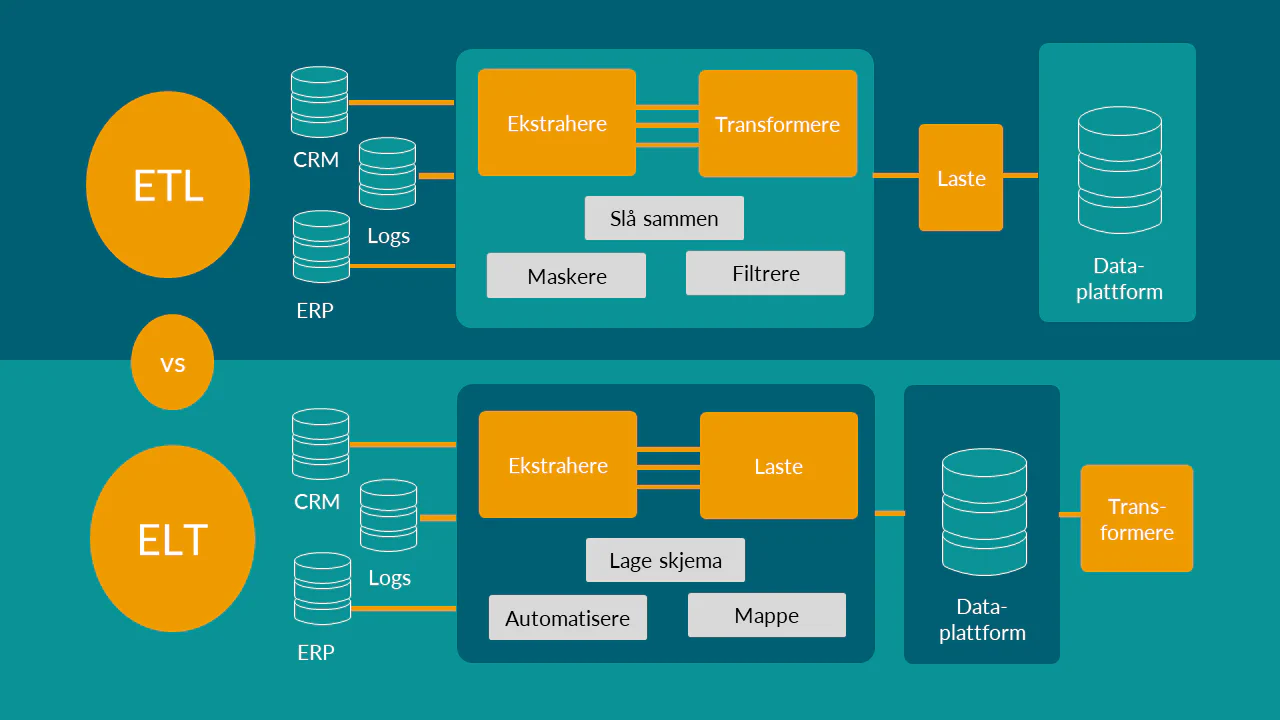

What is ETL and ELT?

ETL stands for “Extract, Transform, Load” and refers to a data integration process where data is extracted from various sources, transformed into the desired format, and loaded into a target system.

ELT stands for “Extract, Load, Transform” and refers to a similar process, but one where the transformation happens after the raw data has been loaded and stored in the target system. ELT has become popular partly because cloud-based storage and analytics tools have become cheaper and more powerful. This has had two consequences. First, bespoke ETL pipelines are no longer able to keep up with the ever-increasing variety and volume of data generated by cloud-based services. Second, companies can now afford to store and process all their data, including unstructured data, in the cloud, so they no longer need to reduce or filter it during the transformation phase.

The most important reason, however, is that we now want to preserve the raw data and model it for the use cases we have. We therefore perform the transformation (“T”) after the data has been extracted and loaded. For reporting, we might create transformations for the various layers of a data warehouse, based on the agreed use cases and the corresponding data modelling. For analytics, we create transformations as needed. The order of the three letters may have changed, but their meaning has not.
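
To make the ordering concrete, here is a minimal ELT sketch in Python, assuming the standard library's in-memory SQLite database as a stand-in for a cloud data warehouse. The table and column names (`raw_orders`, `orders_by_customer`) and the sample records are hypothetical. The raw records are loaded unchanged, and the transformation is a SQL view created afterwards inside the target, so the raw data stays available for other use cases.

```python
import sqlite3

# Hypothetical source records, as they might arrive from an API (the "E").
extracted = [
    {"order_id": 1, "customer": "acme", "amount": "120.50", "status": "shipped"},
    {"order_id": 2, "customer": "acme", "amount": "80.00", "status": "cancelled"},
    {"order_id": 3, "customer": "globex", "amount": "42.25", "status": "shipped"},
]

# An in-memory SQLite database stands in for the target warehouse.
conn = sqlite3.connect(":memory:")

# "L": load the raw data as-is, without filtering or type conversion.
conn.execute(
    "CREATE TABLE raw_orders (order_id INTEGER, customer TEXT, amount TEXT, status TEXT)"
)
conn.executemany(
    "INSERT INTO raw_orders VALUES (:order_id, :customer, :amount, :status)",
    extracted,
)

# "T": transform inside the target, after loading. The view models the raw
# data for one use case: revenue per customer, excluding cancelled orders.
conn.execute(
    """
    CREATE VIEW orders_by_customer AS
    SELECT customer, SUM(CAST(amount AS REAL)) AS revenue
    FROM raw_orders
    WHERE status != 'cancelled'
    GROUP BY customer
    """
)

print(conn.execute("SELECT * FROM orders_by_customer ORDER BY customer").fetchall())
# → [('acme', 120.5), ('globex', 42.25)]

# The raw table still holds every record, including the cancelled order.
print(conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0])
# → 3
```

In a classic ETL pipeline, the filtering and aggregation would run before loading, and the cancelled order would never reach the target. With ELT, a new use case that needs cancellations only requires a new view over the same raw table.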

Developments in ETL and ELT Tools

There are many tools available today for performing the “E” (extract), “L” (load), and “T” (transform). Some tools cover the entire chain (ELT), while others cover only parts of it (e.g. only T).

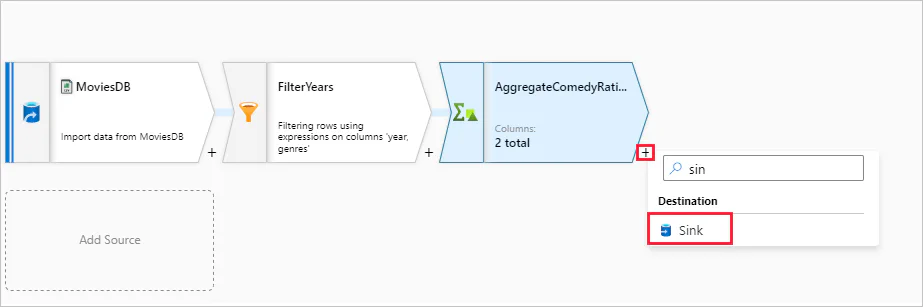

Examples of vendors covering the full ELT spectrum include Informatica, IBM, and Oracle, as well as newer solutions such as Matillion and Talend. The major cloud platforms, such as Azure, Google Cloud, and AWS, also have their own solutions covering the full range of tasks. Most of these solutions are built around graphical user interfaces and “drag-and-drop”.

We also have newer solutions that specialise in doing one or two of these tasks well. For example, Airflow, Prefect, and Dagster specialise in orchestration, that is, scheduling and coordinating the steps of a pipeline. The “E” and “L” have also become more sophisticated over time, with pre-built connectors for all kinds of sources and targets.
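
At its core, an orchestrator runs each step only after the steps it depends on have finished. The sketch below illustrates that idea with Python's standard `graphlib` module (Python 3.9+). It is a simplified illustration, not how Airflow, Prefect, or Dagster are implemented, and the step names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
pipeline = {
    "extract_crm": set(),
    "extract_erp": set(),
    "load_raw": {"extract_crm", "extract_erp"},
    "transform_staging": {"load_raw"},
    "transform_marts": {"transform_staging"},
}

def run_step(name: str) -> None:
    # A real orchestrator would call a connector or a transformation tool here,
    # and would add scheduling, retries, and monitoring.
    print(f"running {name}")

# static_order() yields each step only after all of its dependencies.
execution_order = list(TopologicalSorter(pipeline).static_order())
for step in execution_order:
    run_step(step)
```

The two extract steps do not depend on each other, so their relative order is not fixed; a real orchestrator could run them in parallel. The load and transform steps always follow in sequence.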

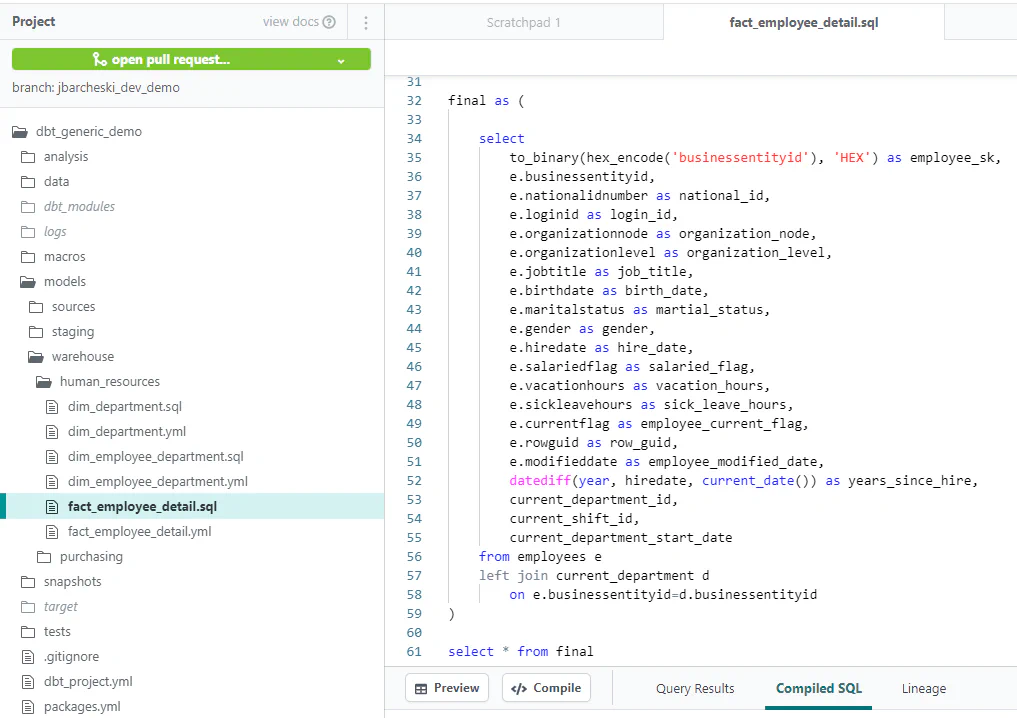

Data transformation tooling has developed somewhat more slowly, but in recent years we have seen solutions such as dbt, Delta Live Tables, and Dataform. dbt in particular has gained a large following, including in Norway.

Requirements for Efficient Development and Management of Data Transformations

We believe that all choices should be based on our needs. So what do we want from a transformation tool?

In general, we see that the field of data and analytics has gradually adopted software engineering practices. We want the flexibility to build anything, the support to develop as efficiently as possible, and good visibility so that we can manage the logic effectively.

Backend developers in software engineering work in an agile manner based on DevOps principles (you build it, you run it). They are accustomed to all logic being code, to code being reusable, to having control through CI/CD, and not least to being able to automate testing, documentation, and repetitive tasks.

We want the same for data and analytics.

Pros and Cons of GUI vs Code for Data Transformation in the ELT Process

GUI for Data Transformation in the ELT Process

Advantages of GUI

- Requires less training for non-technical users to build basic transformation jobs; more people without SQL experience can use the tools

- Easy to solve simple/small use cases

- Graphical representation is preferred by some

Disadvantages of GUI

- Each pipeline must be built “from scratch” and then tested, maintained, and developed further separately, which becomes more challenging as the project grows

- GUIs are limited by the features the tool provides and often struggle with complex transformations. The built-in functionality often covers only 70-80% of needs (depending on the tool), so you frequently have to write code on the side

- “Low-code” tools have their own syntax that must be learned

- Not always as well integrated with CI/CD pipelines

- Less portability, higher switching costs

- Limited built-in functionality related to automated testing, orchestration, and documentation

Code for Data Transformation in the ELT Process

Advantages of code

- Flexible enough to address a wide range of transformation needs and use cases (many supported libraries, customisable)

- Easier to maintain (all logic lives in code, rather than being split between code and a GUI)

- Enables more efficient development through automation and code reuse

- AI-based assistance for data quality and data transformation, which increases developer speed, is likely to become available sooner in code-based tools

- Easy to find technical resources who can code (SQL, Python, etc.)

- Integrates with underlying processing engines, providing portability

- Integrates well with CI/CD pipelines and testing tools

- Often produces more efficient code and processing
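
As an illustration of code reuse and automated testing, the sketch below defines generic data-quality checks once and applies them to several tables, similar in spirit to dbt's built-in `unique` and `not_null` tests. The function names, tables, and data are hypothetical, and SQLite again stands in for the warehouse.

```python
import sqlite3

def check_not_null(conn: sqlite3.Connection, table: str, column: str) -> int:
    """Return the number of rows where `column` is NULL (0 means the check passes)."""
    # Identifiers come from the trusted TESTS configuration, not user input.
    sql = f"SELECT COUNT(*) FROM {table} WHERE {column} IS NULL"
    return conn.execute(sql).fetchone()[0]

def check_unique(conn: sqlite3.Connection, table: str, column: str) -> int:
    """Return the number of distinct values in `column` that occur more than once."""
    sql = (
        f"SELECT COUNT(*) FROM (SELECT {column} FROM {table} "
        f"WHERE {column} IS NOT NULL GROUP BY {column} HAVING COUNT(*) > 1)"
    )
    return conn.execute(sql).fetchone()[0]

# Declarative test configuration: the same checks are reused across tables.
TESTS = [
    (check_not_null, "customers", "customer_id"),
    (check_unique, "customers", "customer_id"),
    (check_not_null, "orders", "order_id"),
    (check_unique, "orders", "order_id"),
]

def run_tests(conn: sqlite3.Connection) -> list[str]:
    """Run every configured check and collect a message for each failure."""
    failures = []
    for check, table, column in TESTS:
        count = check(conn, table, column)
        if count:
            failures.append(f"{check.__name__}({table}.{column}): {count} failing")
    return failures

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (customer_id INTEGER, name TEXT);
    INSERT INTO customers VALUES (1, 'acme'), (2, 'globex');
    CREATE TABLE orders (order_id INTEGER, customer_id INTEGER);
    INSERT INTO orders VALUES (10, 1), (11, 2), (11, 2), (NULL, 1);
    """
)

failures = run_tests(conn)
print(failures)
# → ['check_not_null(orders.order_id): 1 failing', 'check_unique(orders.order_id): 1 failing']
```

Adding a new table to the test suite only takes one more line in `TESTS`. In a CI/CD pipeline, a script like this could exit with a non-zero status whenever `failures` is non-empty, which stops a faulty change before it reaches production.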

Disadvantages of code

- Code requires users to have a certain level of technical knowledge and may require training if they lack experience with macros, SQL, or Python.

- For simple transformations, writing code can take longer than using a GUI.

- Requires the team to have resources with deeper technical competence

- More work with initial setup

What Should I Choose for Data Transformation?

Both approaches have their pros and cons, and the choice will depend on your specific needs. “It depends” is, as usual, the answer.

Small organisations with limited technical competence and simple transformations should choose GUI

If you have simple transformations that do not require much technical knowledge, GUI may be the best alternative. GUI provides a simple and intuitive way to perform data transformation, even for non-technical users. It can also save time, as you do not need to write code. Smaller organisations with straightforward use cases can comfortably use low-code/no-code tools such as Data Factory and Alteryx.

Larger organisations with technical competence and varied transformation needs should choose code

If you have more complex transformations that require higher accuracy or automation, code may be the best alternative. Code provides more flexibility and precision in the transformation process and offers the ability to automate. Larger organisations with “professional” development environments should use code as much as possible – at least for the official data flows.

Power users in the business line will also largely be able to use code-based tools – and love it. Data flows that are being tested and explored, or that are not critical, can be developed in low-code/no-code tools if desired.

FAQ

What use cases are data transformation tools best suited for?

Data transformation tools are designed to ensure that we create data that is usable for both reporting and analytics. These tools support the transformation of raw data into refined data, which is then shared across many different user groups. Raw data is transformed based on data modelling, which is particularly important for use cases related to data warehouses and reporting.

Is technical knowledge necessary to use code for data transformation in the ELT process?

Yes, using code for data transformation in the ELT process typically requires a certain level of technical knowledge. Fortunately, it requires knowledge of programming languages that many people already know: SQL and Python. It is also worth remembering that data modelling skills matter regardless of the type of tool.

Can we use both code-based and GUI-based data transformation tools?

Yes, and large enterprises will often have several tools that do the same thing. If you have the choice, however, it is wise to standardise on as few tools as possible. Each additional tool requires its own CI/CD pipeline setup, monitoring, and more, which increases complexity. Not least, multiple tools require duplicated competence.

Who will be the users of a data transformation tool?

The users of data transformation tools, both GUI-based and code-based, will be data engineers, analytics engineers, BI developers, and business analysts.

Should data scientists use a data transformation tool?

Partially. Data scientists who use methods such as machine learning and neural networks need other tools to process data. They often use notebooks, such as Jupyter, to work step by step with code and documentation, typically in Python.

When they have determined that the analyses provide value and should be put into production, we recommend moving the general modelling and data transformation that other use cases can benefit from into the shared codebase managed by the data transformation tool. This can be done by data engineers, ML engineers, or data scientists.

Data scientists also often assist in the development of data flows and reporting. In that case, they must follow the principles, processes, and tool choices that apply to this type of use case, regardless of job title.

Which tools are most commonly used for code-based data transformation?

At the time of writing, these are the most popular tools for code-based data transformation:

- dbt (dbt Labs) (not tied to a specific data platform or cloud service)

- Delta Live Tables (only on Databricks as a processing engine)

- Dataform (only on Google Cloud)