The Components of a Data Platform

01.07.2024 | 24 min Read | Category: Data Platform | Tags: #data platform, #AI, #architecture

Learn about the core components that make up a modern data platform, and understand how they work together to collect, store, process, and present data. We explain how a data platform enables various use cases from data sources to consumption.

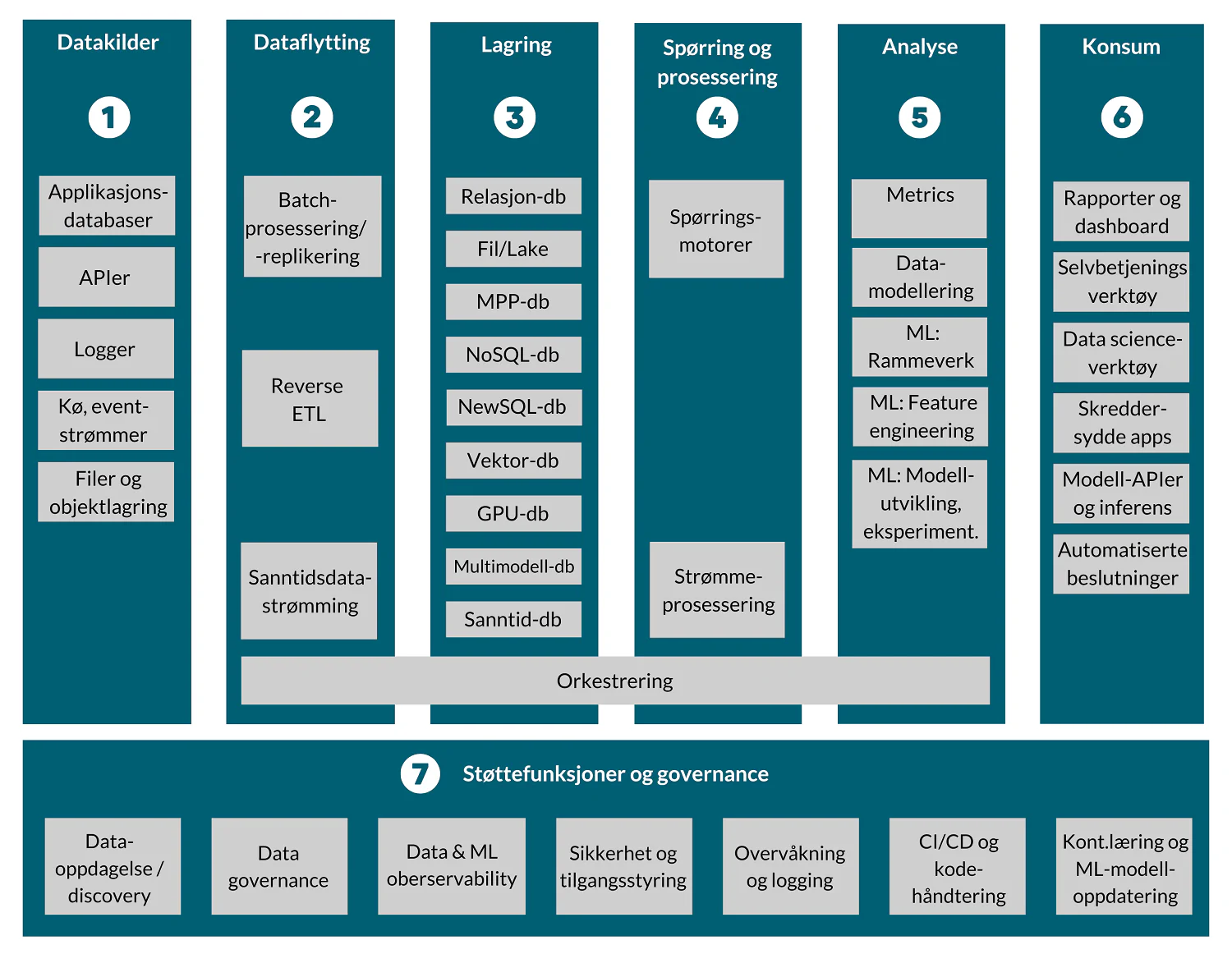

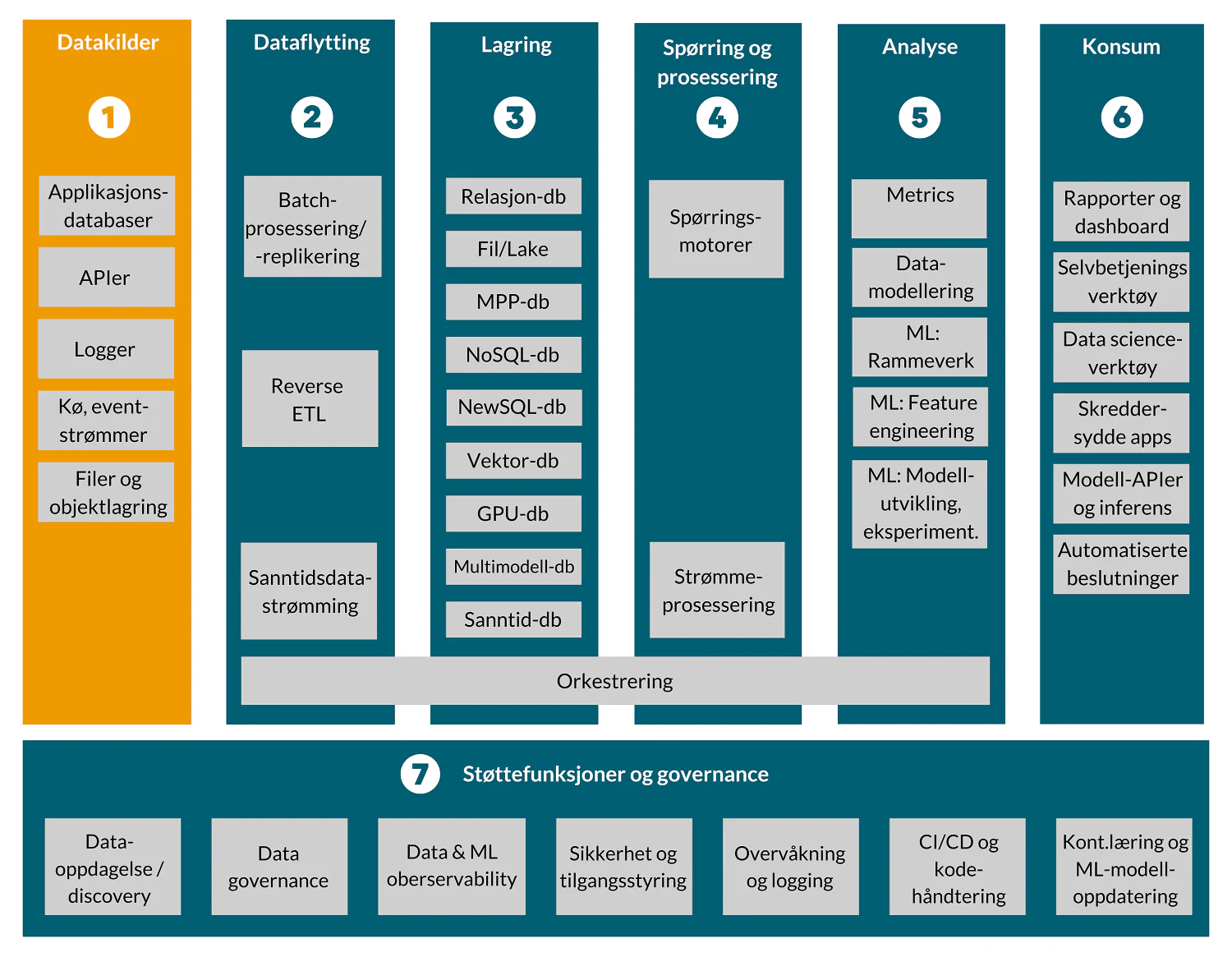

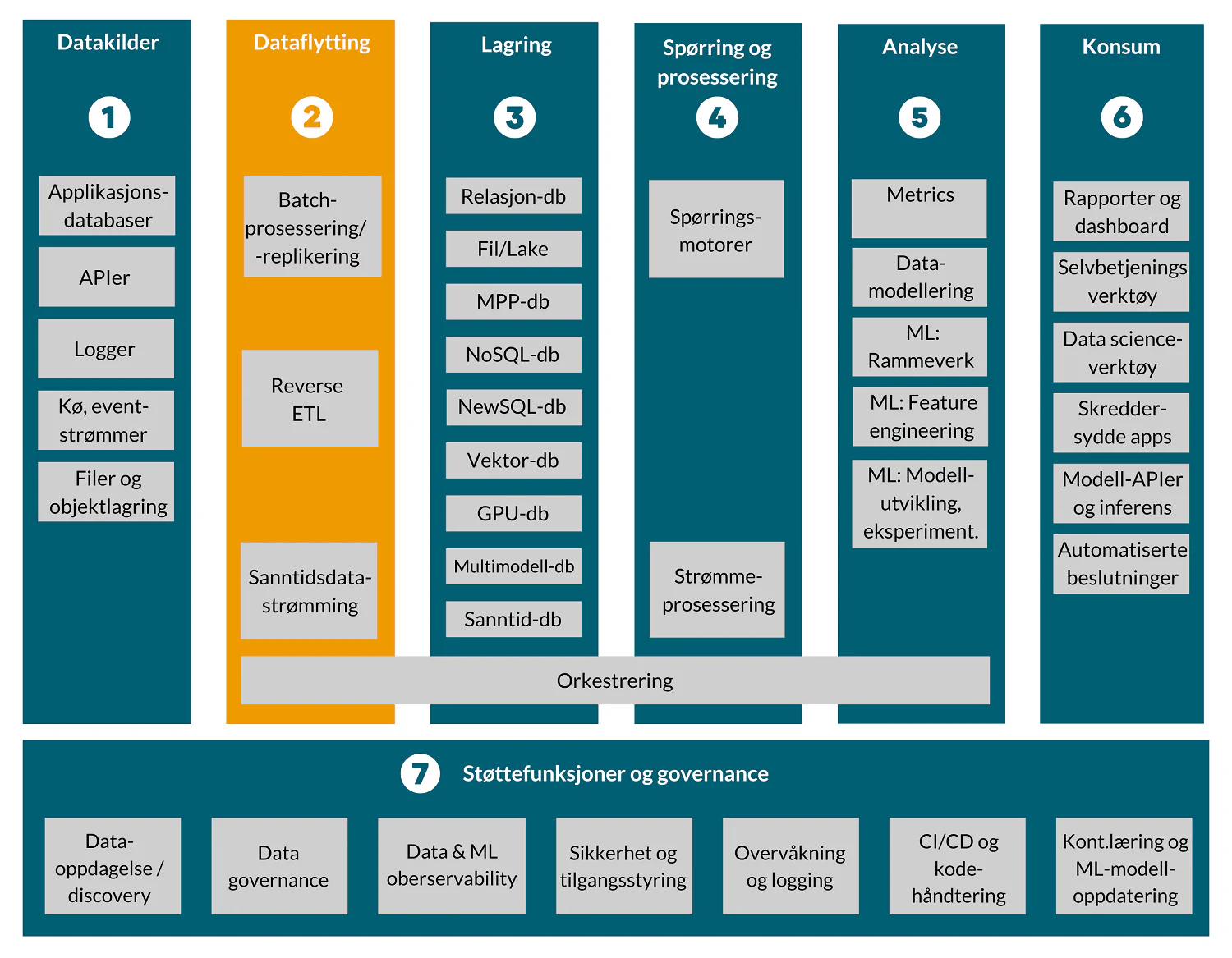

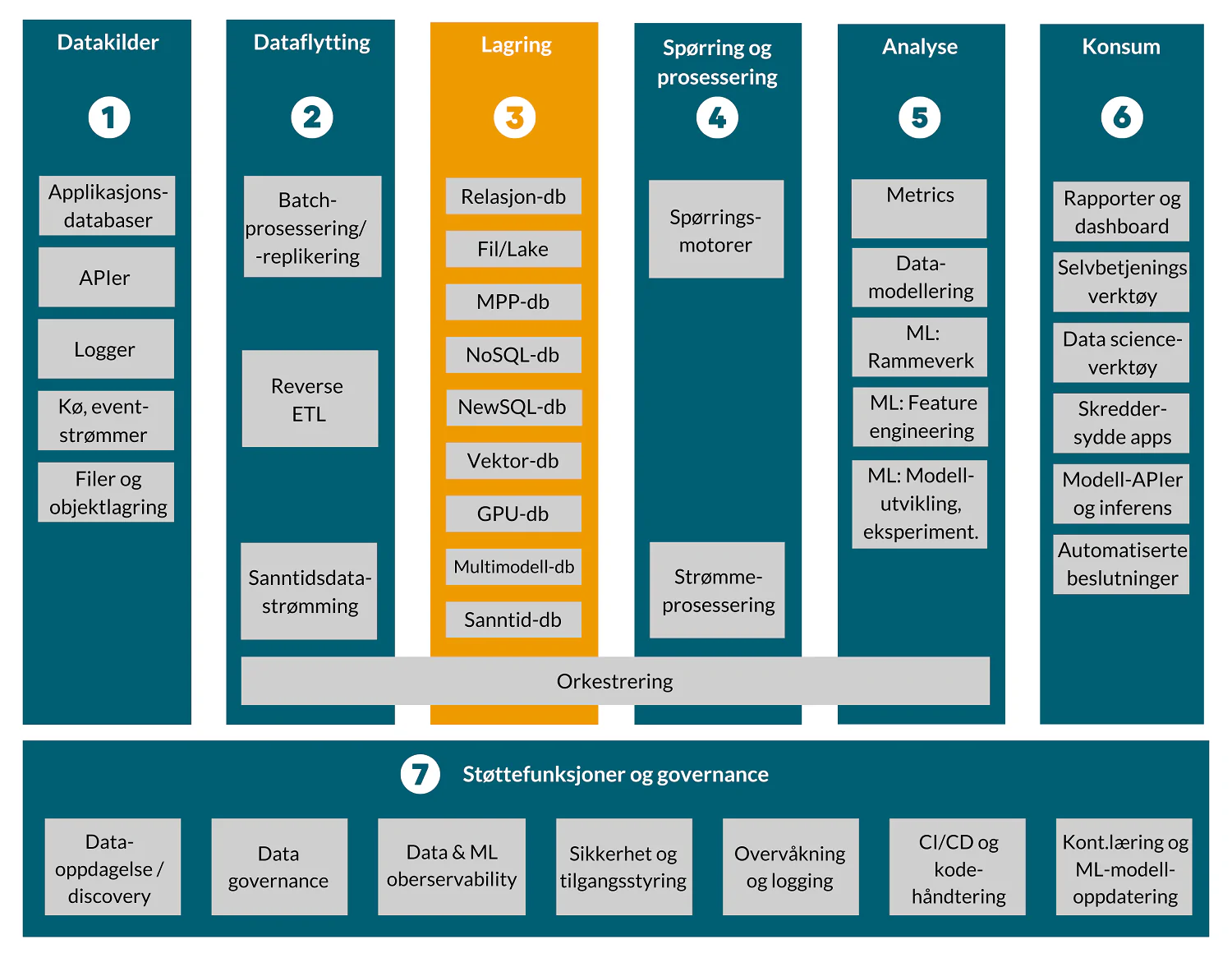

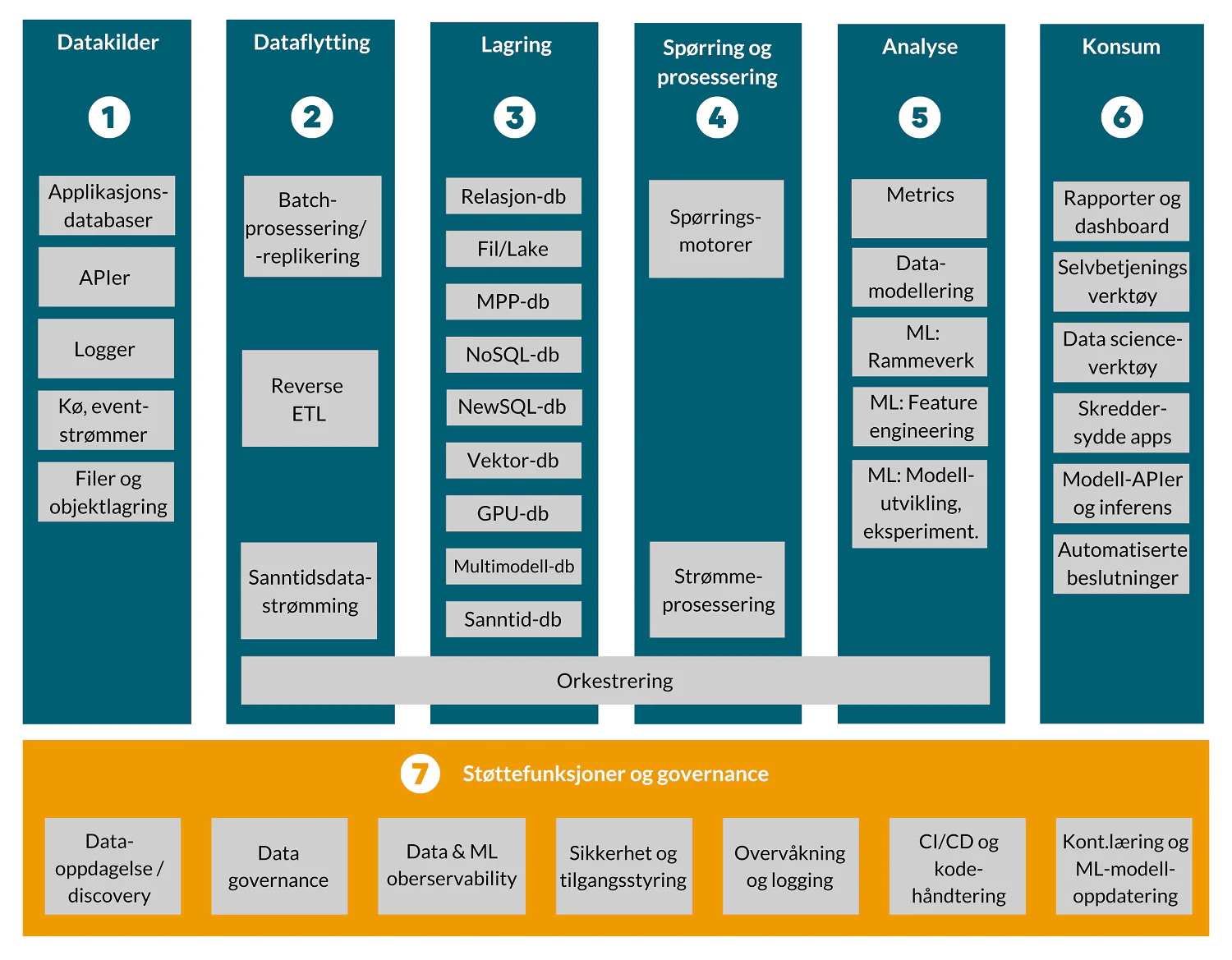

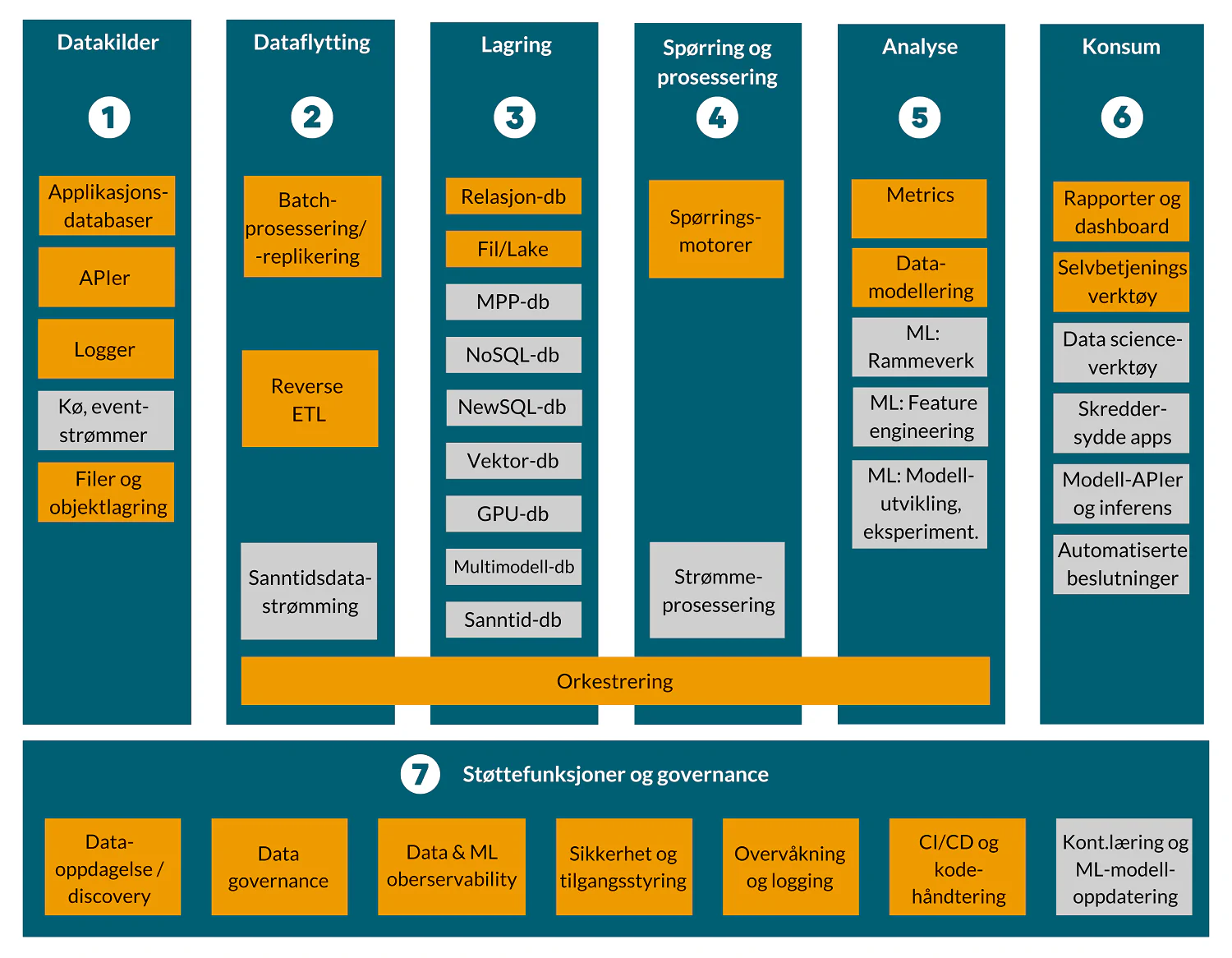

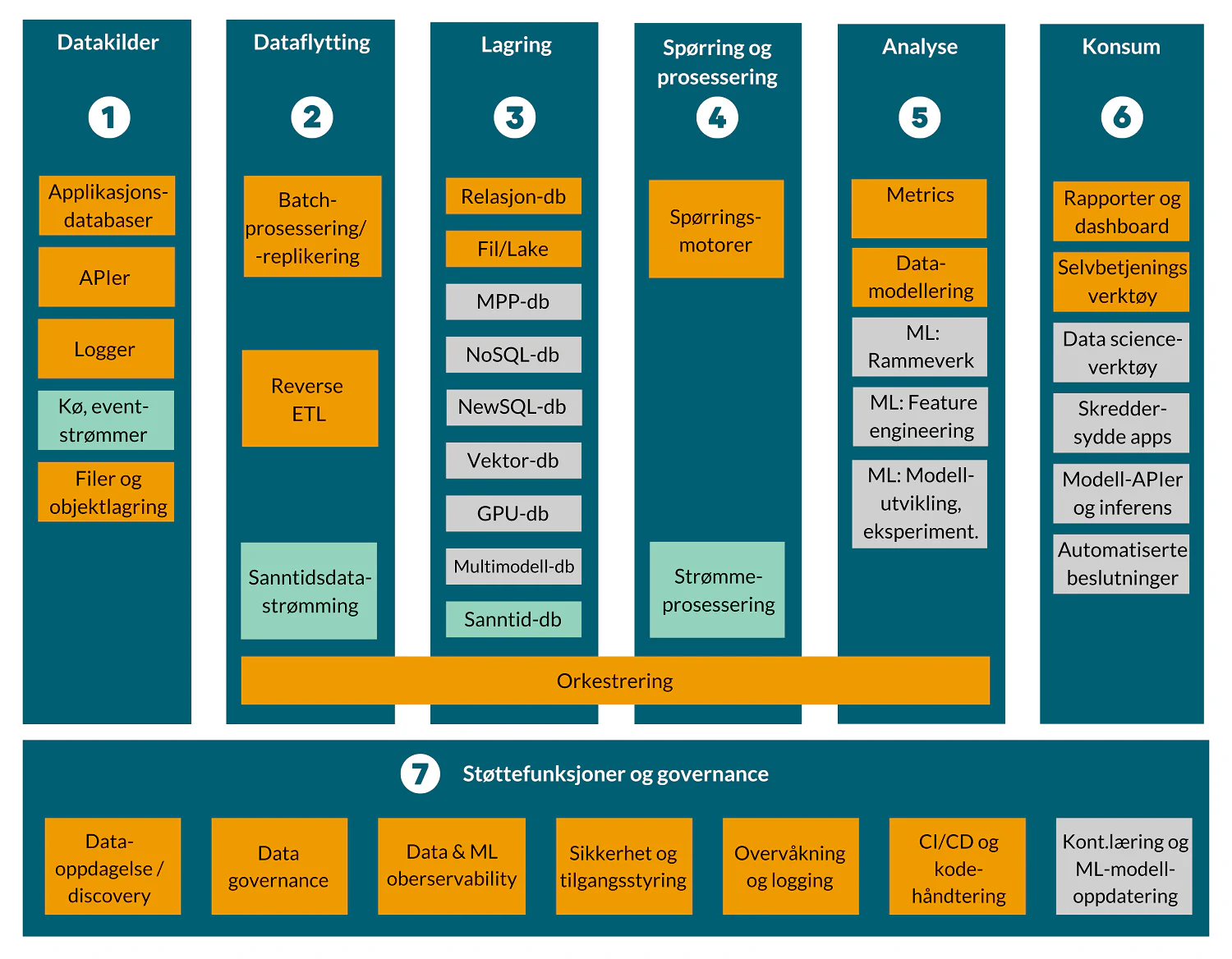

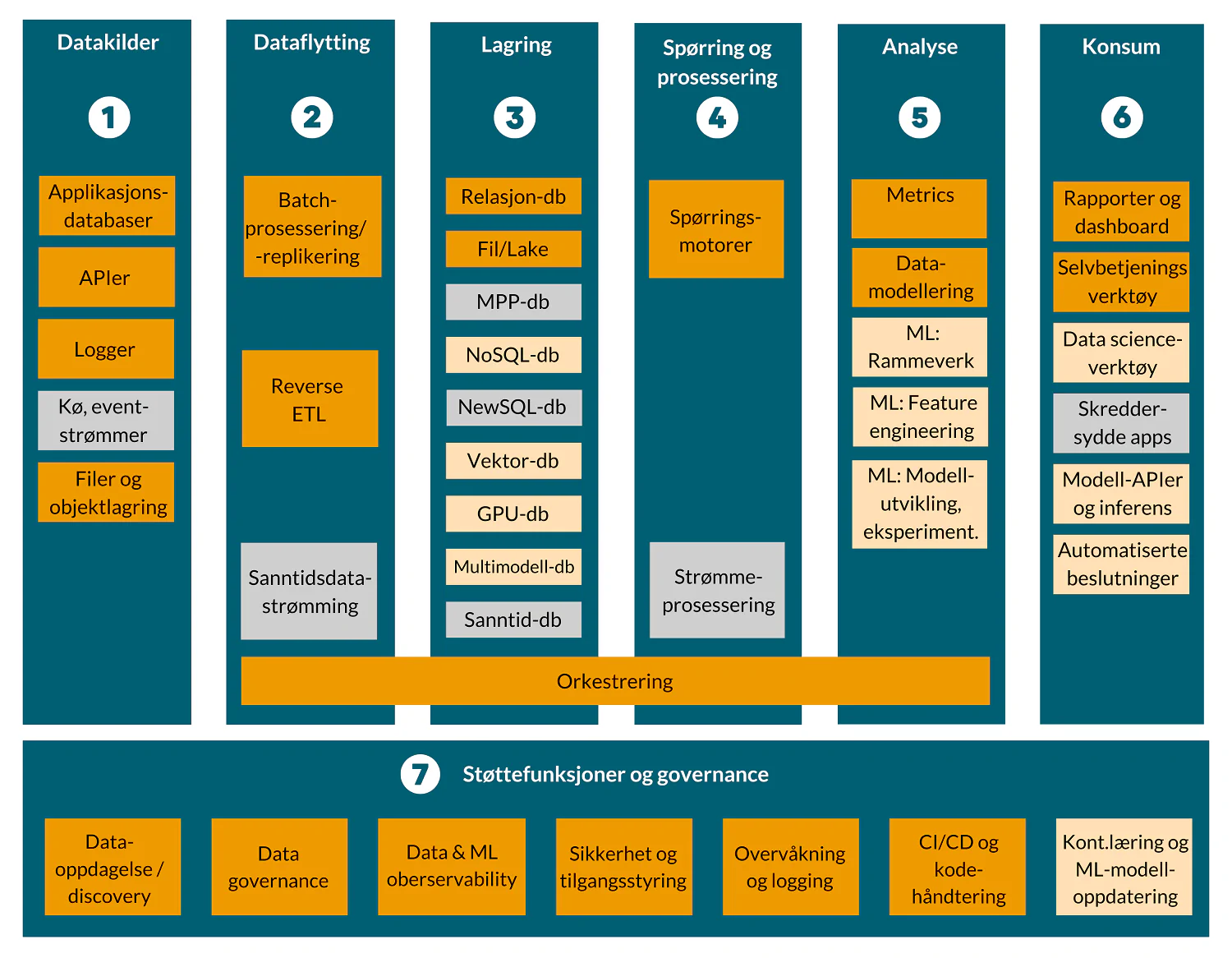

The Helicopter View: Components of a Data Platform

A data platform broadly consists of seven distinct components, as illustrated here.

- Data Sources: Provide data about relevant events, business transactions, master data, reference data, etc.

- Data Movement: Extracts data from operational systems and other data sources. Delivers data to the storage service in a schema compatible with both source and target, using a specified loading method, frequency, and schedule.

- Storage: Stores data in a format suitable for queries and processing systems. Optimizes for low cost, scalability, and analytical workloads (e.g., columnar storage).

- Querying and Processing: Provides interfaces for data engineers, analysts, and data scientists to execute queries against stored data and leverages parallelization (distributed compute) for faster response times. Enables describing what has happened and predicting what will happen. Supports machine learning and AI-based solutions.

- Analytics: Refines transformed data into structures better suited for specific analyses.

- Consumption: Presents the results of queries and data analyses to users or as interfaces to other applications.

- Support Functions and Governance: Coordinates data flows, query execution, and transformations across all layers of the data pipeline. Ensures data quality, performance, and governance for all systems and datasets.

Looking more closely at the individual components, a data platform can look something like this:

1. Data Sources

Data sources provide us with raw data. Data is created in operational systems that drive the business, often as descriptions of transactions. Data is delivered in various formats, detail levels, and frequencies.

Modern enterprises with a data-driven application portfolio have systems that generate data frequently, such as clickstream from websites and apps, and event logs with continuous data streams from sensors. Data sources can also be external parties providing market data, weather data, and financial data.

Data sources are rarely built to deliver data outward, and the way they deliver data varies. We therefore use the term disparate sources or disparate data to describe the challenge of combining data across sources and business areas.

The most important categories of data sources are:

| Source Category | Description |
|---|---|
| Application databases | Structured data sources that store data generated by the organization’s applications. This includes relational databases such as MySQL, PostgreSQL, and SQL Server, where data is organized in tables with rows and columns. These databases often contain transaction data, customer information, product data, and other important business information. |
| APIs | Provide programmatic access to data and functionality in applications and services. APIs can fetch data in real time, such as financial data, weather data, and social media data. APIs are often RESTful or SOAP-based and return data in formats such as JSON or XML. |
| Logs | Text-based files that record events in a system, including server logs, application logs, and user behavior logs. Logs are invaluable for troubleshooting, security monitoring, and system performance analysis. |
| Queues/event streams | Used to process and transfer real-time data between systems. They are useful for applications that require real-time analytics and responses, such as IoT devices, financial transactions, and user interactions. |
| Files and object storage | Encompass various file formats such as CSV, JSON, and Parquet, and storage systems such as AWS S3, Google Cloud Storage, and Azure Blob Storage. These store data in a variety of formats and are often used for batch processing and storing large data volumes. |

2. Data Movement / Data Integration

Data movement, also called “ingest,” is essential in a modern data platform. The process ingests data from various sources and formats, standardizes them, and prepares them for further processing and analysis. The goal of data movement is to make data ingestion as automated and seamless as possible, so that data can be quickly transformed and analyzed.

Main methods for data movement include:

- Batch processing: Processes large data volumes at specific times. Useful for sources that are updated periodically, ensuring efficient transfer and processing.

- Real-time data streaming: Processes data continuously as it is generated, such as sensor data. Important for applications requiring immediate updates and fast response times.

- Reverse ETL: Moves data from the data platform back into operational systems, for example integrating changes in master data (e.g., product attributes) into the systems that need them.

- Orchestration: Coordinates and streamlines the execution of various data operations. Orchestration tools automate workflows, manage dependencies, and ensure that data movement occurs in the correct order and at the right time. This includes the use of Directed Acyclic Graphs (DAGs) spanning from raw data to transformed data, including machine learning.
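The DAG idea behind orchestration can be sketched with Python's standard `graphlib` module (Python 3.9+). This is a minimal illustration, not how any particular orchestration tool works; the task names and the `execution_order` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "stage_raw": {"extract_orders", "extract_customers"},
    "transform_sales": {"stage_raw"},
    "train_model": {"transform_sales"},
}


def execution_order(dag):
    """Return one valid run order in which every task follows its dependencies.

    Raises graphlib.CycleError if the graph is not acyclic.
    """
    return list(TopologicalSorter(dag).static_order())


order = execution_order(pipeline)
print(order)
```

A real orchestrator adds scheduling, retries, and parallel execution on top of this ordering, but the dependency graph is the core abstraction.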

3. Storage

Storage technology is used both to store data in raw format upon ingestion and to store data that has been processed to varying degrees.

The storage layer in a data platform aims to offer various types of storage technologies that support requirements for response time, availability, uptime, failover, and disaster recovery, among others. For most organizations, cloud storage technology will be the most relevant choice, as it offers cost-effective and scalable capacity.

Depending on the intended use, a data platform will typically contain the following database technologies within the storage services:

| Storage Type | Description |
|---|---|
| Relational databases (RDBMS) | Relational databases are traditional databases for structured data from transaction-oriented source systems such as ERP systems, bank transactions, and flight reservation systems. These are often used to build data warehouses. |
| File/Lake storage | Distributed file systems such as the Hadoop Distributed File System (HDFS) spread files across a number of machines with associated storage to accelerate read and write operations. Often referred to as a “Data Lake,” where large amounts of raw data can be stored cost-effectively. |
| MPP databases | Massively Parallel Processing (MPP) databases are a special type of relational database where data is processed on multiple computers with associated storage. These are designed to handle queries over enormous data volumes and are not efficient for many small queries. |
| NoSQL databases | NoSQL databases do not use tables to organize data like relational databases do. They were introduced to address some of the relational database’s shortcomings regarding scaling and flexibility in the data model. Subtypes include document-oriented databases, key-value stores, graph databases, and wide-column databases. |
| NewSQL databases | NewSQL databases combine the advantages of traditional relational databases with the scalable properties of NoSQL databases. They are designed to handle high transaction volumes with ACID properties. |
| Vector databases | Vector databases store data in the form of vectors, which is particularly useful for machine learning applications that require fast access to high-dimensional data. |
| GPU databases | GPU databases use graphics processors to accelerate data computation tasks. They are ideal for applications requiring high performance and fast processing of large data volumes. |
| Multi-model databases | Multi-model databases support multiple data models (e.g., relational, graph, document) in a single database, thereby providing flexibility to handle different data types and workloads. |
| Real-time databases | Real-time databases are optimized to provide immediate responses to queries and are used in applications requiring fast access to up-to-date information. |

Here are important properties you should consider when choosing a storage service:

- Response time: Different storage technologies are optimized for different response time requirements. For example, in-memory databases provide fast access, while HDFS is optimized for high throughput of large data volumes.

- Availability and uptime: Storage systems must be available and reliable to ensure continuous access to data. This includes mechanisms for failover and disaster recovery to protect against data loss and downtime.

- Scalability: Storage systems must be able to scale to handle increasing data volumes without losing performance. This is especially true for systems like HDFS and NoSQL databases that are designed for horizontal scalability.

- Cost-effectiveness: The choice of storage technology affects the costs of the data platform. Cloud-based storage services often provide a cost-effective solution that can be adapted as needed.

Choosing the right storage technology is critical for an effective data platform. By combining different storage technologies, a data platform can meet varied requirements for response time, availability, scalability, and cost-effectiveness.

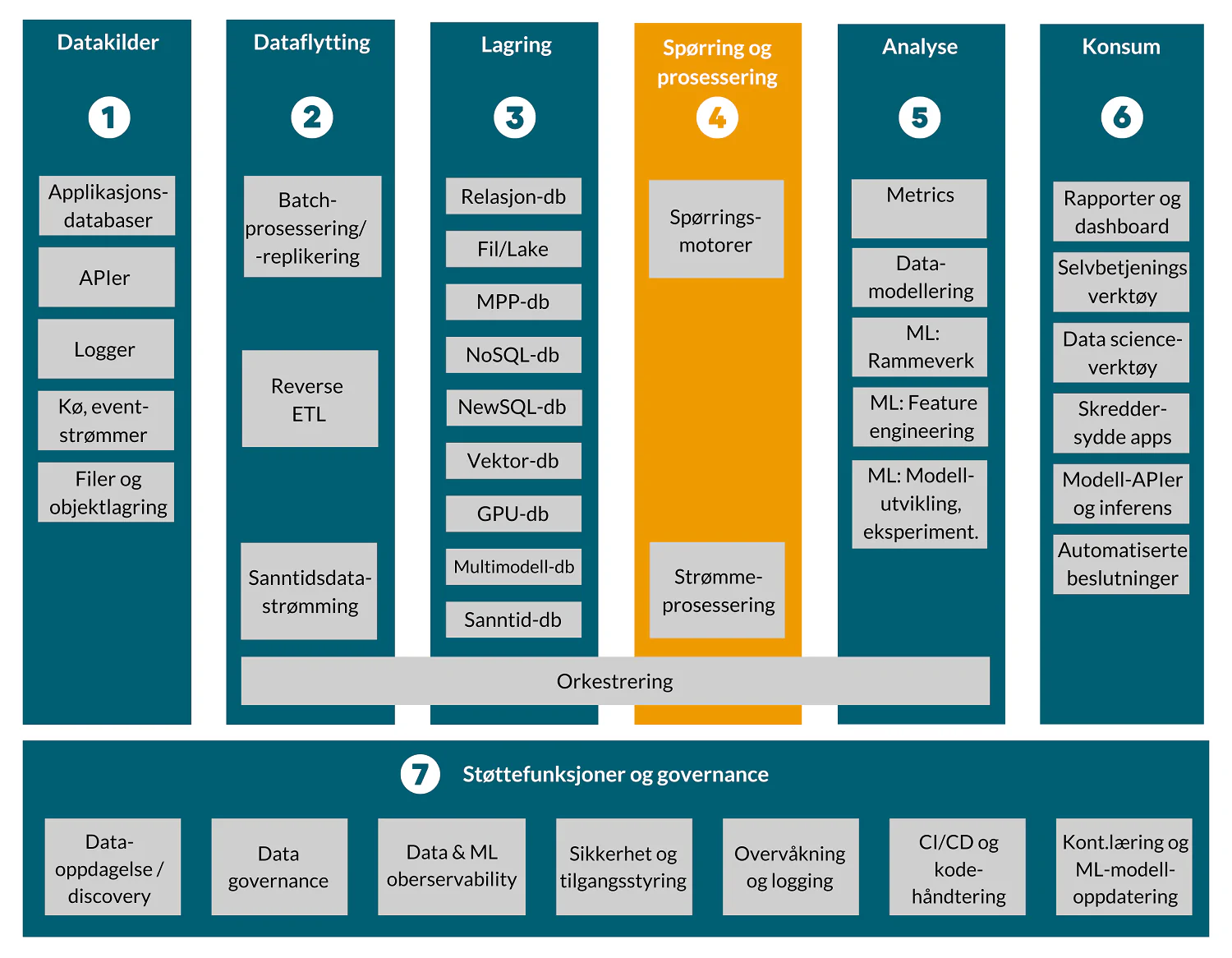

4. Querying and Processing

The data platform contains multiple areas with different purposes. Upon receipt, data is stored in approximately the same form as in the source. It is then refined into models for general analytical use, or into models specialized for specific analytical use cases such as machine learning algorithms.

The main purpose of the processing layer is to coordinate and run the transformations of data loaded into the data platform, refining it into the data models that the platform contains and that are prepared for the analytics and consumption layers.

Processing technology is divided into batch data processing and streaming data processing, where streaming refers to data that arrives continuously and at very high frequency:

- Batch processing with SQL and Spark-based query engines: Batch processing involves processing large amounts of data at specific times. This is useful for tasks that do not require real-time results, such as nightly data updates, monthly reporting, and historical data analysis. For most analytical needs, batch processing at a frequency of, for example, 15 minutes, 60 minutes, or once a day is sufficient.

- Stream processing: For scenarios where there is a need for analytical insight in near real-time, processing of streaming data with low latency will be required.
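The difference between the two processing styles can be sketched in plain Python. This is a simplified illustration under assumed names (`batch_totals`, `StreamingTotals`, and the sensor events are hypothetical); production systems would use a query engine or a stream processor instead.

```python
from collections import defaultdict

# Hypothetical sensor events; in practice these would come from files or a queue.
events = [
    {"sensor": "a", "value": 2.0},
    {"sensor": "b", "value": 5.0},
    {"sensor": "a", "value": 4.0},
]


def batch_totals(batch):
    """Batch: process the whole accumulated dataset in one scheduled run."""
    totals = defaultdict(float)
    for event in batch:
        totals[event["sensor"]] += event["value"]
    return dict(totals)


class StreamingTotals:
    """Streaming: update the result incrementally as each event arrives."""

    def __init__(self):
        self.totals = defaultdict(float)

    def on_event(self, event):
        self.totals[event["sensor"]] += event["value"]
        # The updated value is available immediately, without waiting for a batch.
        return self.totals[event["sensor"]]


stream = StreamingTotals()
for event in events:
    stream.on_event(event)

print(batch_totals(events))
print(dict(stream.totals))
```

Both approaches arrive at the same totals; the difference is when the result becomes available and how much state must be kept between events.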

Important aspects of using queries and processing capabilities include:

- Parallel and distributed computing: By leveraging parallel and distributed computing, the data platform can handle large data volumes and complex queries faster. This involves using multiple processing units working simultaneously to complete tasks.

- Query optimization: Query optimization is the process of improving query efficiency to reduce processing time and resource consumption. This can include techniques such as indexing, query rewriting, and the use of materialized views.

- Data transformation: Transformation involves reshaping raw data into a structured format suitable for analysis. This can include aggregation, filtering, sorting, and enrichment of data.

- Machine learning and AI: The data platform often supports advanced analytics such as machine learning and artificial intelligence. This requires extensive processing to train models, run predictions, and evaluate results. Model training in particular requires significant computing power, especially for large datasets and complex models such as deep neural networks. Scalable infrastructure, such as distributed computing systems and GPU clusters, is necessary to handle these requirements.
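The data transformation steps listed above (filtering, enrichment, aggregation, and sorting) can be illustrated with a small sketch. The order records, the category lookup, and the `revenue_by_category` function are hypothetical examples, and plain Python stands in for the SQL or Spark engine a real platform would use.

```python
from collections import defaultdict

# Hypothetical raw data as it might arrive from a source system.
raw_orders = [
    {"order_id": 1, "product_id": "p1", "amount": 120.0, "status": "completed"},
    {"order_id": 2, "product_id": "p2", "amount": 80.0, "status": "cancelled"},
    {"order_id": 3, "product_id": "p1", "amount": 30.0, "status": "completed"},
    {"order_id": 4, "product_id": "p2", "amount": 50.0, "status": "completed"},
]
# Hypothetical reference data used for enrichment.
product_categories = {"p1": "hardware", "p2": "software"}


def revenue_by_category(orders, categories):
    """Filter, enrich, aggregate, and sort raw orders into an analytical result."""
    totals = defaultdict(float)
    for order in orders:
        if order["status"] != "completed":  # filtering
            continue
        category = categories.get(order["product_id"], "unknown")  # enrichment
        totals[category] += order["amount"]  # aggregation
    # sorting: highest revenue first
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


print(revenue_by_category(raw_orders, product_categories))
```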

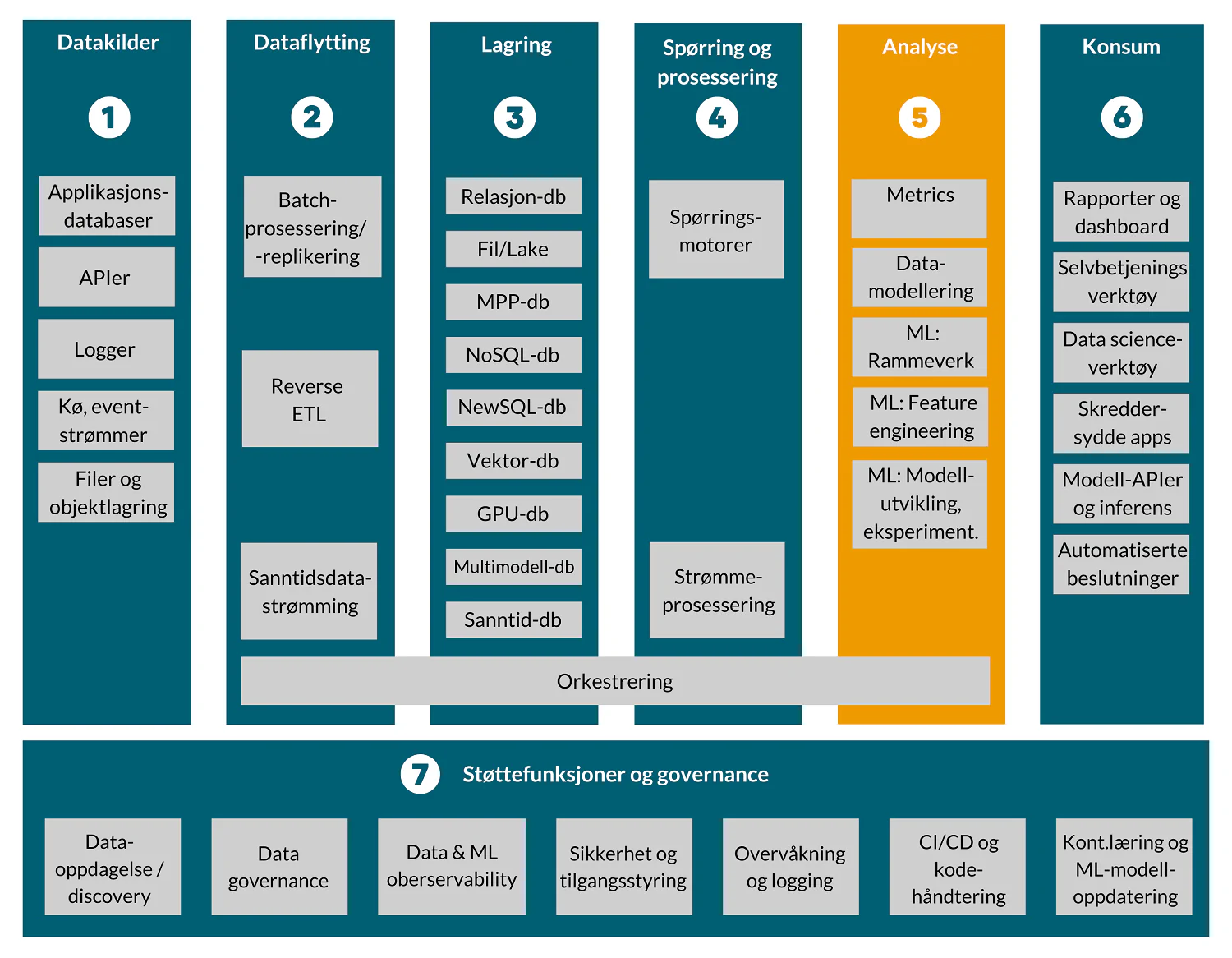

5. Analytics

The purpose of the analytics layer is to provide quality-assured analytical tables for use in various types of tools in the consumption layer. This layer is critical for transforming raw data into insightful, actionable data that can drive business decisions. Analytics involves data modeling, metrics layers, enrichment, aggregation, and various types of analytical techniques.

Main components in the analytics layer typically include:

- Data modeling: Structures data so that it is easy to understand and analyze. This includes building dimensional models, such as star schemas and snowflake schemas, that simplify analysis and reporting.

- Aggregation: Collects and summarizes data to provide an overview of larger data volumes. This can include calculating averages, sums, counts, and maximum and minimum values.

- Metrics layer: Functions as a semantic layer where business logic and definitions are standardized. It ensures that all users in the organization use the same definitions for key metrics, which promotes consistency and accuracy in reporting and analysis. Product profitability for an item should, for example, be the same whether it is displayed in Power BI, in a dashboard in a web portal, or imported as a metric in a data science notebook.

- Machine learning and AI: Machine learning and artificial intelligence (AI) are used to discover patterns in data, automate decision-making processes, and build predictive models. This includes techniques such as neural networks, decision trees, and cluster analysis.

Data preparation and feature engineering are critical steps in the machine learning process. Data preparation involves cleaning, transforming, and structuring data to make it ready for modeling. Feature engineering creates new variables (features) from raw data that can improve model performance, and these features can usefully be defined as a logical layer on top of your data.

Model development and experimentation involve using tools and platforms to build, test, and evaluate machine learning models. This includes experiment tracking to compare results across training runs and hyperparameter tuning.
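The idea of a metrics layer, where a metric such as product profitability is defined once and reused by every consumer, can be sketched as follows. The formula, the `METRICS` registry, and the `compute_metric` function are assumptions made for illustration; the actual business definition would come from the organization.

```python
def _product_profitability(row):
    """Assumed definition for illustration: (revenue - cost) / revenue."""
    if row["revenue"] == 0:
        return None  # undefined when there is no revenue
    return (row["revenue"] - row["cost"]) / row["revenue"]


# Single registry of metric definitions shared by all consumers.
METRICS = {
    "product_profitability": _product_profitability,
}


def compute_metric(name, row):
    """Look up a metric by name so every consumer applies the same logic."""
    return METRICS[name](row)


item = {"product": "p1", "revenue": 200.0, "cost": 150.0}

# A dashboard and a notebook would both call the same function
# rather than re-implementing the formula.
dashboard_value = compute_metric("product_profitability", item)
notebook_value = compute_metric("product_profitability", item)
print(dashboard_value, notebook_value)
```

Because the definition lives in one place, a change to the formula reaches all consumers at the same time.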

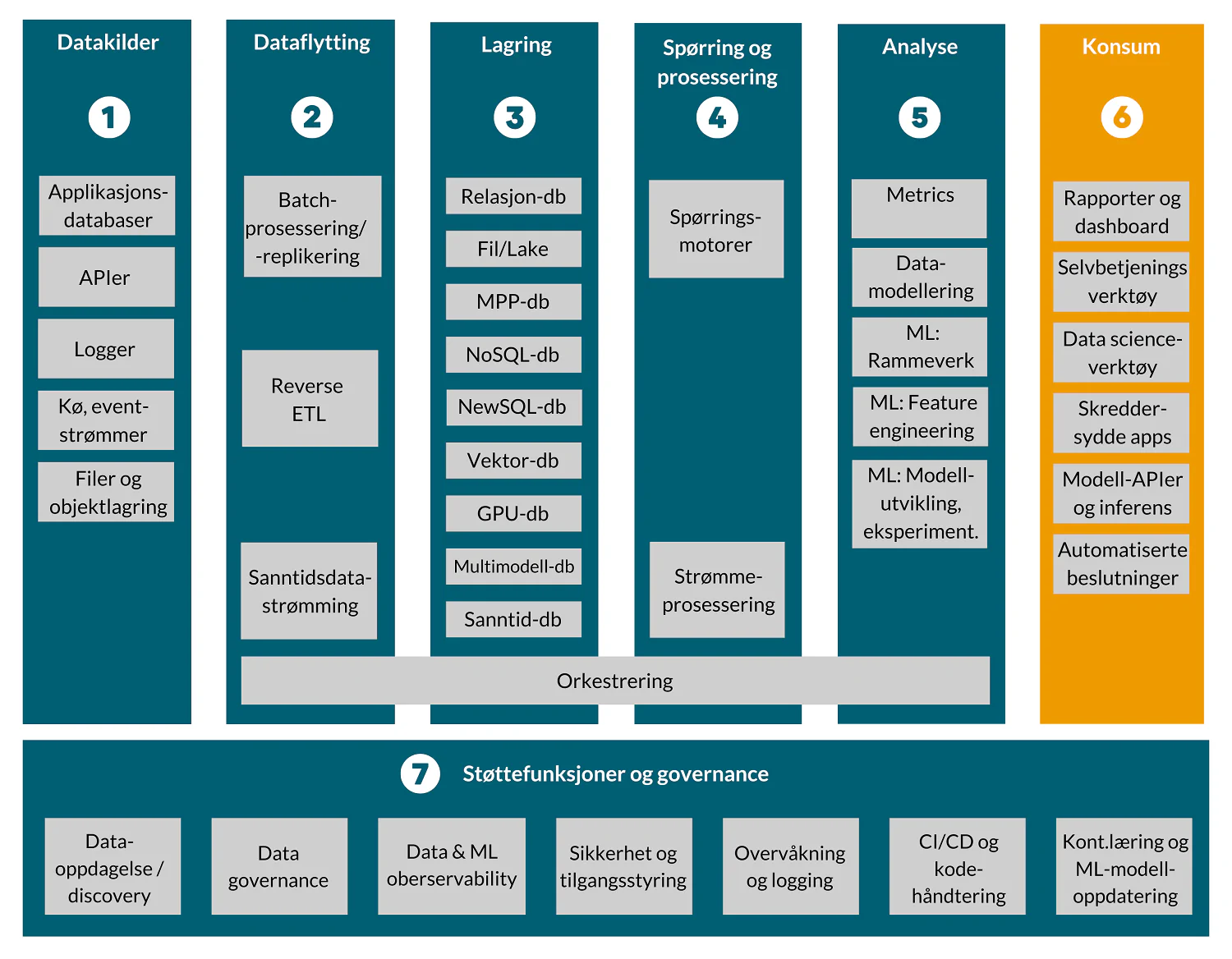

6. Consumption

The role of the consumption layer is to present data tailored to different use cases and user groups, so that data can be converted into information that is then used to create value. The consumption layer contains tools ranging from simple presentation of standardized reports to machine processing using advanced machine learning algorithms and artificial intelligence.

Consumption capabilities often include:

- Fixed reporting and dashboards: Fixed reporting and dashboards provide instant and up-to-date access to data and information, often visualized in graphs, charts, or KPI indicators. Dashboards provide a quick overview of key metrics or trends that help decision-makers understand business status quickly.

- Self-service analytics tools: Self-service tools give end users the ability to extract, analyze, and visualize data on their own, without requiring significant technical knowledge. These tools promote data exploration and analysis in a simple and intuitive way.

- Data Science and AI tools: Data Science and AI tools are advanced tools designed for data analysis, statistics, machine learning, and artificial intelligence. They are used to build, train, and deploy models to predict, classify, or recognize patterns in large datasets.

- App frameworks and custom applications: App frameworks and custom applications give developers the ability to build tailored applications for specific business needs or processes. These applications can visualize data in unique ways and integrate data analytics into business processes. Examples of frameworks include React, Angular, and Django.

- Model APIs and inference services: Model APIs and inference services make it possible to integrate machine learning models directly into applications and workflows. This includes RESTful APIs for model predictions and batch inference for large data volumes.

- Automated decision systems: Automated decision systems use AI and machine learning models to make decisions without human intervention. This includes systems for automating complex decision-making processes in real time.

Historically, the tools in the consumption layer have been characterized by requiring people with specialized expertise to develop reports that combine good visual and pedagogical presentation with the correct metrics. In recent years, and especially with the launches of tools like Tableau, Qlik, and Power BI, the self-service concept has gained momentum.

User-friendliness and self-service are critical for scaling insight from being a niche competency in the organization to becoming a natural part of all employees’ work processes. As part of the democratization of data, the consumption layer has also increasingly evolved toward being an area or portal for data sharing, sharing analytical results, and collaborating on developing insights.

7. Support Functions and Governance

Overview of Support Functions and Governance

Support and governance tools help answer questions such as what data exists, where it comes from, how it has been processed, how sensitive it is, and who should have access. In addition, tools for monitoring operational processes, logging events, and ensuring data quality are central to effective operations and management.

In this part of the data platform, we can categorize the tools into the following groups:

- Data Governance

- Data Discovery

- Data Observability

- Security and Access Control

- Monitoring and Logging

- CI/CD and Code Management

Data Governance

Over time, the tools that consume data from the data platform have gained an increasingly broad scope, more users, and coverage of more user environments.

This makes it increasingly important to have a shared overview of reusable definitions so that the organization can establish a common vocabulary. It is important to be able to programmatically track which data is used where, and by whom.

Data Governance is a discipline that involves having clear decision structures and distribution of responsibility to guide the use of data to create value. Data Governance is important for the data platform, but it is a discipline that extends from the data sources all the way to the specific use cases realized outside the data platform itself.

Typical functions related to Data Governance in a data platform include:

- Metadata management: Managing and documenting metadata for better data understanding and use.

- Documentation in code: Ensuring that code and data analyses are well-documented for transparency and reuse.

- FinOps: Overview of costs related to data processing and storage.

- DataOps: Overview of data flows, what has been run and stored.

- Data Lineage: Tracing data from source to consumption to understand data origins and transformations.

- Security and compliance for AI: Includes guidelines and procedures to ensure that AI models operate within legal and ethical frameworks.
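Data lineage, as described in the list above, is essentially a graph that can be traversed from a consumed dataset back to its sources. The sketch below assumes a simple dictionary representation; the dataset names and the `upstream` and `sources` helpers are hypothetical.

```python
# Hypothetical lineage graph: each dataset maps to the datasets it is built from.
lineage = {
    "sales_dashboard": ["sales_mart"],
    "sales_mart": ["orders_clean", "customers_clean"],
    "orders_clean": ["erp.orders"],
    "customers_clean": ["crm.customers"],
}


def upstream(dataset, edges):
    """Return every dataset that the given dataset depends on, directly or indirectly."""
    seen = set()
    stack = [dataset]
    while stack:
        current = stack.pop()
        for parent in edges.get(current, []):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def sources(dataset, edges):
    """Return only the original sources: upstream datasets with no parents of their own."""
    return {name for name in upstream(dataset, edges) if name not in edges}


print(sorted(upstream("sales_dashboard", lineage)))
print(sorted(sources("sales_dashboard", lineage)))
```

The same traversal run in the opposite direction answers the impact question: which reports are affected if a source changes.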

Data Discovery

Data Discovery describes the processes and tools used to discover, scan, and describe the datasets that exist in the organization. Data Discovery combines search capabilities, automatic scanning, cataloging, and modeling with people’s domain knowledge to communicate the knowledge necessary to scale up the use of analytical insight. This enables users to find relevant data quickly and efficiently, which in turn supports data-driven decisions and insights.

Typical functions related to Data Discovery in a data platform include:

- Data cataloging: Creating a searchable catalog of available data for easy access and overview.

- Data profiling: Analyzing datasets to understand their structure, content, and quality, thereby identifying relevant data for specific needs.

- Search and queries: Facilitating search functions and queries so that users can quickly find and retrieve data.
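Data profiling can be illustrated with a small sketch that summarizes each column of a dataset. The `profile` function and the sample rows are hypothetical, and the sketch assumes that all values are hashable; catalog tools typically compute many more statistics.

```python
def profile(rows):
    """Summarize each column: share of nulls, observed types, and distinct values."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            stats = columns.setdefault(
                name, {"count": 0, "nulls": 0, "types": set(), "distinct": set()}
            )
            stats["count"] += 1
            if value is None:
                stats["nulls"] += 1
            else:
                stats["types"].add(type(value).__name__)
                stats["distinct"].add(value)
    return {
        name: {
            "null_ratio": stats["nulls"] / stats["count"],
            "types": sorted(stats["types"]),
            "distinct": len(stats["distinct"]),
        }
        for name, stats in columns.items()
    }


# Hypothetical sample of a customer table.
rows = [
    {"id": 1, "country": "NO"},
    {"id": 2, "country": None},
    {"id": 3, "country": "NO"},
]
report = profile(rows)
print(report)
```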

Data Observability

Data observability is a collective term for the ability to observe and manage data quality across applications in the organization. Observability as a concept comes from the DevOps mindset in software development. Data observability applies the same kind of automated monitoring to data in order to evaluate its quality.

Data observability includes both tools for monitoring and data quality management, as well as more advanced functionality such as machine learning algorithms for identifying abnormal values, changes in data patterns, and schema changes. It is common to divide data observability into five pillars:

- Volume: Ensures that the content is complete. For example, if 2-3 million rows are normally received per hour, receiving only 100,000 rows may indicate problems.

- Freshness: Monitors whether the data is up-to-date and identifies any gaps or breaks in continuity.

- Distribution: Checks whether values are within the expected range of variation.

- Structure/schema: Tracks changes in the dataset’s formal structure, including what has changed and when the changes occurred.

- Lineage: Maps the data’s origin and processing steps through the system.

These pillars are almost identical to what the data quality discipline calls data quality dimensions, which shows how central data quality is to data observability.
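Four of the five pillars (volume, freshness, distribution, and schema) lend themselves to simple automated checks, sketched below. The `run_checks` function, the thresholds, and the column names are assumptions for illustration; the volume threshold mirrors the example of 2-3 million rows per hour given above. Lineage is left out because it is a graph rather than a per-batch check.

```python
from datetime import datetime, timedelta, timezone


def run_checks(batch, expectations, now):
    """Evaluate one loaded batch against four pillars; True means the check passed."""
    low, high = expectations["value_range"]
    return {
        "volume": batch["row_count"] >= expectations["min_rows"],
        "freshness": now - batch["loaded_at"] <= expectations["max_age"],
        "distribution": all(low <= value <= high for value in batch["sample_values"]),
        "schema": set(batch["columns"]) == set(expectations["columns"]),
    }


# Hypothetical expectations for an hourly load.
expectations = {
    "min_rows": 2_000_000,
    "max_age": timedelta(hours=1),
    "value_range": (0.0, 500.0),
    "columns": ["order_id", "amount", "created_at"],
}

now = datetime(2024, 7, 1, 12, 0, tzinfo=timezone.utc)
batch = {
    "row_count": 100_000,  # far below the normal volume
    "loaded_at": now - timedelta(minutes=20),
    "sample_values": [12.5, 80.0, 499.0],
    "columns": ["order_id", "amount", "created_at"],
}

results = run_checks(batch, expectations, now)
failed = [name for name, passed in results.items() if not passed]
print(failed)
```

Static thresholds like these are a starting point; the more advanced tools mentioned above learn the expected ranges from historical data.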

Security and Access Control

Security and access control consist of technology and, perhaps most importantly, good processes and routines to protect data against unauthorized access, leaks, regulatory violations, and breaches of ethical guidelines throughout the lifecycle.

Managing security is often complicated by having many environments. A modern data platform typically uses a unified security platform rather than multiple different solutions. Such a platform makes it easier to standardize security across cloud platforms and reduces manual processes.

A modern security platform can centralize security rules and apply them in one or more cloud environments, such as Azure Data Lake, Google BigQuery, or Snowflake, without the rules having to be rewritten and adapted for each data platform.

Key functions in a security platform include:

- Automated discovery of sensitive data: The tool scans datasets and classifies or suggests classification of sensitive data such as social security numbers, payment information, etc.

- Tracking data usage and access: Monitors who uses data and how, so that abnormal activity can be detected.

- Automated policy enforcement: Synchronization of metadata with data catalogs or identity management systems, enabling automated access control.

- Standardization of security protocols: Applies centralized security rules across different cloud environments, ensuring consistency and reducing risk.

- Reduction of manual processes: Automates security tasks to minimize human errors and increase efficiency.
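Automated discovery of sensitive data can be approximated with pattern matching over sampled column values, as in the sketch below. The patterns, the 80% threshold, and the `classify_column` function are assumptions for illustration (the social security pattern follows the US format); commercial tools combine such rules with dictionaries and machine learning.

```python
import re

# Hypothetical detection rules; real tools ship far larger rule sets.
PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "us_ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
}


def classify_column(values, threshold=0.8):
    """Suggest a sensitivity label when enough non-null values match a pattern."""
    non_null = [str(value) for value in values if value is not None]
    if not non_null:
        return None
    for label, pattern in PATTERNS.items():
        hits = sum(1 for value in non_null if pattern.match(value))
        if hits / len(non_null) >= threshold:
            return label
    return None


def classify_table(columns):
    """Classify every column of a table given as {column_name: sample_values}."""
    return {name: classify_column(values) for name, values in columns.items()}


sample = {
    "contact": ["a@example.com", "b@example.org", None],
    "national_id": ["123-45-6789", "987-65-4321"],
    "city": ["Oslo", "Bergen"],
}
print(classify_table(sample))
```

The suggested labels would normally be reviewed by a data owner before they drive access policies.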

Monitoring and Logging

Monitoring and logging are critical components in a data platform to ensure stable operations, identify problems quickly, and maintain high performance. These functions provide insight into the system’s state and help detect anomalies and error situations in real time. Typically, you start with basic monitoring and logging, and then expand it as risk areas emerge.

Typical functions in monitoring and logging include:

- Real-time monitoring: Continuous monitoring of system performance and state to ensure optimal operations and rapid identification of problems.

- Event logging: Detailed logging of all system events, including errors, warnings, and information about data processing jobs.

- Alerts and alarms: Automated alerting for anomalies or problems to enable rapid response and problem resolution. This can be as simple as automatically sending emails, SMS messages, or Teams messages.

- Log data analysis: Use of log analysis tools to identify patterns, trends, and root causes of problems.

Continuous Learning and ML Model Updating

Continuous learning and model updating are critical processes to ensure that machine learning models remain accurate and relevant over time, especially in dynamic environments where data and business conditions are constantly changing. This process includes several key aspects:

- Model monitoring: Continuous monitoring of model performance in production involves tracking key metrics such as accuracy, precision, recall, and F1 score, as well as identifying deviations or degradation in performance. By monitoring the model in real time, problems can be quickly detected and addressed.

- Automated training pipelines: Automation of model training and updating is essential for maintaining efficiency and scalability in model administration. This includes tools and platforms that automate the entire training process, from data preparation to model training, validation, and deployment.

- Model versioning: Model versioning involves documenting each version of the model, including training data, hyperparameters, algorithms, and performance metrics, to keep track of different versions and their performance.

- Automated retraining: Regular retraining of models with new data is necessary to adapt to changes in data and environment. Automated retraining processes use new data to update models, ensuring they remain relevant and accurate.

- Data-driven feedback loop: A continuous feedback loop from the production environment back to the training environment is essential for continuous learning. This involves collecting and analyzing new data, user interactions, and other relevant signals to improve model performance over time.

- Compliance and ethics: Continuous learning must also take into account legal and ethical aspects. This involves ensuring that models operate within legal frameworks, maintain privacy, and avoid biases that can lead to unfair or discriminatory results. Regular auditing and adjustment of models ensures compliance with applicable regulations and ethical guidelines.
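Model monitoring and automated retraining can be connected by a simple rule: compute the key metrics on recent labeled production data and trigger retraining when performance drops. The sketch below assumes binary labels (0 and 1); the function names, the baseline, and the tolerance are hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def needs_retraining(metrics, baseline_f1, tolerance=0.05):
    """Flag the model when F1 falls more than `tolerance` below the baseline."""
    return metrics["f1"] < baseline_f1 - tolerance


# Hypothetical labels collected from production and the model's predictions.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
print(needs_retraining(metrics, baseline_f1=0.9))
```

In practice the baseline would come from the model version registry, and the flag would start an automated training pipeline rather than print a value.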

CI/CD and Code Management

Continuous Integration (CI) and Continuous Deployment (CD) are important practices brought to the data domain from modern software development. These practices ensure that code is in a constant state of integration, testing, and deployment, resulting in faster development cycles and higher quality.

Typical functions in CI/CD and code management include:

- Version control: Use of tools such as Git to manage and version codebases, enabling collaboration and traceability.

- Automated building: Automated build processes such as compilation and testing of code to ensure it works as expected.

- Automated testing: Integration of test scripts that run automatically upon code changes to validate functionality and identify bugs early.

- Continuous deployment: Automation of the deployment process so that changes can be quickly put into production after approval.

- Environment management: Administration of different development, test, and production environments to ensure consistency and stability across deployments.
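Automated testing in a data context often means unit tests for transformation logic that run on every code change. The sketch below uses Python's standard `unittest` module; the `normalize_country` transformation and the `run_tests` helper are hypothetical examples.

```python
import unittest


def normalize_country(code):
    """Hypothetical transformation: trim and upper-case a country code; blanks become None."""
    if code is None:
        return None
    cleaned = code.strip().upper()
    return cleaned or None


class NormalizeCountryTest(unittest.TestCase):
    def test_trims_and_uppercases(self):
        self.assertEqual(normalize_country(" no "), "NO")

    def test_blank_becomes_none(self):
        self.assertIsNone(normalize_country("   "))

    def test_none_passes_through(self):
        self.assertIsNone(normalize_country(None))


def run_tests():
    """Run the suite programmatically, as a CI job might, and return the result."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeCountryTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_tests()
print(result.wasSuccessful())
```

A CI pipeline would typically block the merge or deployment when such a run reports failures.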

Examples of Typical Architecture Patterns

Data Lakehouse with Batch Integration

Data Lakehouse combines the properties of a data lake and a data warehouse, using batch integration to extract, transform, and load data.

- Data sources: Data is fetched from various sources, such as transaction systems and logs.

- Batch processing: Data is loaded into the data lake in batches at specific times.

- Data Lakehouse: Combines raw data from the data lake with structured data organized further in data warehouse layers.

- Analytics and reporting: Users perform queries and analyses on the data for insight and decision support.

Hybrid Analytical and Operational Data

This architecture pattern integrates both analytical and operational data in a hybrid environment to support real-time decisions and deeper analyses.

- Operational data sources: Real-time data from operational systems such as CRM and sensors, in combination with data fetched in batches.

- Hybrid data storage: Data is stored both in real-time databases and in databases for historical analysis.

- Deep analysis: Batch processes for historical trends and predictive analysis, often in combination with real-time dashboards and simple rules for anomaly alerting.

Machine Learning in Development and Production

This pattern focuses on machine learning from experimentation to production, with separate environments for development and production.

- Data collection: Data is collected from various sources and stored as raw data.

- Data preparation: Data is cleaned and transformed for use in machine learning.

- Feature store: Stores and reuses features across different projects, preferably as a logical layer on top of the raw data.

- Model development: Data scientists experiment and train models in a development environment.

- Model deployment: Approved models are deployed to the production environment.

- Model monitoring: Continuous monitoring and updating of models to ensure accuracy.

The Way Forward

Through this guide, we have covered the most important components of a data platform, including data sources, data movement and data integration, storage, querying and processing, analytics, consumption, and support functions and governance.

Remember that a data platform is not static; it evolves continuously to meet changing needs and technological advances. Advanced features such as orchestration, data observability, and automated model updates are examples of functionality that many organizations have not yet fully implemented.